Abstract

We live in a multisensory world and one of the challenges the brain is faced with is deciding what information belongs together. Our ability to make assumptions about the relatedness of multisensory stimuli is partly based on their temporal and spatial relationships. Stimuli that are proximal in time and space are likely to be bound together by the brain and ascribed to a common external event. Using this framework we can describe multisensory processes in the context of spatial and temporal filters or windows that compute the probability of the relatedness of stimuli. Whereas numerous studies have examined the characteristics of these multisensory filters in adults and discrepancies in window size have been reported between infants and adults, virtually nothing is known about multisensory temporal processing in childhood. To examine this, we compared the ability of 10- and 11-year-olds and adults to detect audiovisual temporal asynchrony. Findings revealed striking and asymmetric age-related differences. Whereas children were able to identify asynchrony as readily as adults when visual stimuli preceded auditory cues, significant group differences were identified at moderately long stimulus onset asynchronies (150–350 ms) where the auditory stimulus was first. Results suggest that changes in audiovisual temporal perception extend beyond the first decade of life. In addition to furthering our understanding of basic multisensory developmental processes, these findings have implications for disorders (e.g., autism, dyslexia) in which emerging evidence suggests alterations in multisensory temporal function.

Keywords: Intersensory, Auditory, Visual, Development, Simultaneity, Asynchrony

1. Introduction

Many of our everyday experiences are multisensory. For example, in a typical communicative exchange, we hear the words that are spoken and see the corresponding visual information in the form of an individual’s lips moving. To make sense of the wealth of sensory information available at any given moment, our brains have evolved specialized mechanisms to extract meaningful information both within and across the different sensory systems. For multisensory processes, two of the most salient pieces of information used to determine the relatedness of objects or events are their spatial and temporal proximity, and numerous studies in adults have focused on defining how manipulations of these relations alter the magnitude of multisensory interactions. Despite this wealth of data, surprisingly little is known about how these processes mature during postnatal life. Based on the premise that judgments regarding the interrelatedness of multisensory stimuli are modified with age, this study explores the development of multisensory processing by contrasting audiovisual temporal asynchrony detection abilities in younger and older participants.

1.1. Temporal aspects of multisensory processing

The benefits of the combined use of information from several senses have been revealed in numerous studies, and include enhancements in signal detection, speeded motor responses, and improved speech in noise performance (Frens, Van Opstal, & Van der Willigen, 1995; Grant & Seitz, 2000; Hughes, Reuter-Lorenz, Nozawa, & Fendrich, 1994; Lovelace, Stein, & Wallace, 2003; Sumby & Pollack, 1954). In addition, a host of psychophysical illusions reveal the continual and compelling interplay between the senses. For example, pairing distinct and discordant auditory and visual speech cues (e.g., an auditory /ba/ with a visual /ga/) can result in report of an intermediary and novel percept (e.g., /tha/ or /da/) (McGurk & MacDonald, 1976). Illusory percepts can also be generated with highly reduced multisensory stimuli, as evidenced by the fact that the presentation of a single visual flash accompanied by two tone pips can result in report of multiple flashes (Shams, Kamitani, & Shimojo, 2000).

Where examined, these behavioral benefits and perceptual illusions have been shown to be critically dependent on the temporal and spatial structure of the paired stimuli, with the strength of multisensory interactions declining as a function of increasing spatial and/or temporal disparity. In the temporal realm, numerous studies have suggested the presence of a temporal window of multisensory integration, or a range of interstimulus intervals over which multisensory stimuli are highly likely to be bound into a single perceptual event (Dixon & Spitz, 1980; Shams, Kamitani, & Shimojo, 2002; van Wassenhove, Grant, & Poeppel, 2007). The boundaries of these temporal binding processes have been delineated by quantifying the perseverance and magnitude of multisensory effects (e.g., speeded motor reaction times, psychophysical illusions, reports of simultaneity) as the time interval between the presentation of the constituent multisensory stimuli is lengthened (Colonius & Diederich, 2004; Dixon & Spitz, 1980; Koppen & Spence, 2007; Munhall, Gribble, Sacco, & Ward, 1996; Shams et al., 2002; van Wassenhove et al., 2007). Studies have indicated that multisensory interactions are reduced with increasing asynchrony and that the rate of decay of integrative effects is asymmetric; the slope of the left side of the window is steeper indicating that asynchrony is more readily detected for stimulus pairings when the auditory cue is presented first.

In the search for the neural correlates for these multisensory behavioral and perceptual phenomena, human electrophysiological and imaging studies have revealed a similar temporal dependency. Synchronous presentation of auditory and visual speech produces decreases in the latency of early cortical auditory evoked potentials (van Wassenhove, Grant, & Poeppel, 2005). Furthermore, combined audiovisual stimuli produce greater gamma band oscillatory activity when presented at smaller audiovisual stimulus onset asynchronies (SOAs) (Senkowski, Talsma, Grigutsch, Herrmann, & Woldorff, 2007), which is suggested to be reflective of multisensory binding (Schneider, Debener, Oostenveld, & Engel, 2008; Senkowski, Schneider, Tandler, & Engel, 2009). Imaging studies have identified a distributed network of cortical and subcortical regions involved in multisensory integration whose activation profiles reveal a strong temporal dependency (Bushara, Grafman, & Hallett, 2001; Dhamala, Assisi, Jirsa, Steinberg, & Kelso, 2007; Noesselt et al., 2007; Powers, Hevey, & Wallace, Unpublished results). For example, blood oxygenation level dependent (BOLD) signals, an indirect measure of neuronal activity revealed in fMRI studies, have been found to be increased in the superior temporal sulcus (STS) and auditory and visual cortices in response to coincident audiovisual stimuli (Noesselt et al., 2007). Conversely, BOLD decreases have been observed in these areas in response to asynchronous stimulus pairs (Noesselt et al., 2007).

1.2. Age effects on multisensory task performance

While the bulk of human multisensory research has focused on adults, the development of various aspects of multisensory integration has been examined in human infants, largely using paradigms that track the duration of gaze maintenance (i.e., preferential looking). Behavioral work has indicated that infants as young as four months of age show the ability to detect tempo and synchrony, and that temporal invariants (amodal cues – available to both auditory and visual senses) assist in discerning what stimuli are produced by a unitary event (Bahrick, 1983, 1987, 1988; Lewkowicz, 1986, 1992, 1996, 2000; Spelke, 1979). Furthermore, Lewkowicz (1996) has shown that when compared to adults, infants have a larger temporal window for binding asynchronous visual and auditory stimuli, suggesting that they perceptually fuse temporally disparate multisensory stimulus pairs that are not fused in adults.

While no studies have examined multisensory performance between older children and adults on temporally based tasks, work has reported immature multisensory processing abilities in pre-adolescents and adolescents relative to adults on a variety of non-temporally based tasks in the audiovisual realm and in other sensory modalities (Barutchu et al., 2010; Gori, Del Viva, Sandini, & Burr, 2008; Massaro, 1984; McGurk & MacDonald, 1976; Tremblay et al., 2007). Studies examining the McGurk illusion in children and adults reported fewer perceived illusions (instances of multisensory integration) in younger participants and found that when responses were dominated by a single modality (i.e., unfused trials), children relied more heavily on the auditory input (McGurk & MacDonald, 1976; Massaro, 1984; Tremblay et al., 2007). While the influence of vision on audiovisual processing appears to increase with age for speech, performance on a measure employing basic stimuli (i.e., flash beep illusion) reportedly did not change with age (Tremblay et al., 2007). Like the speech studies, work in other sensory modalities (i.e., visual–haptic, visual–proprioception) has identified compelling immaturities in multisensory integration (Gori et al., 2008; Nardini, Jones, Bedford, & Braddick, 2008). Performance differences were noted in children 5–10 years of age and adults on a visual and haptic (active touch) size discrimination task (Gori et al., 2008). Whereas adults and older children were found to weight visual information more heavily in making size estimations, the judgments of the youngest children were more influenced by the haptic information. This and other data suggest that middle childhood (i.e., 8–10 years) represents an important transitional period for the maturation of multisensory processing. For a brief review on age-related differences in statistical optimality of multisensory integration and more evidence for delays in the emergence of interactions, see Ernst (2008).

1.3. Characterization of multisensory temporal processing in children: rationale for the current study

As highlighted, research has identified age-related differences in multisensory abilities and has established the concept of a plastic multisensory temporal binding window that changes with ontogeny. However, no studies have systematically documented differences in the temporal aspects of audiovisual integration between children and adults. The goal of the current study was to characterize multisensory temporal function in children and adults using an audiovisual simultaneity judgment task previously used by our group to assess the multisensory temporal binding window in adults (as well as its malleability, see Powers, Hillock, & Wallace, 2009). This measure enables us to directly compare multisensory temporal function in younger and older participants.

2. Methods

2.1. Subjects

Typically developing children (n = 13; 11 males; mean age = 10.7 years) and adults (n = 14; 6 males; mean age = 26.6 years) were recruited to participate in the study. Informed consent (from adult participants and from parents/guardians of minors) and assent (from the children) were obtained prior to study participation in accordance with an approved protocol of the Vanderbilt Institutional Review Board (IRB). All subjects had normal hearing (pure tone thresholds less than 25 dB HL at octave frequencies from 250 to 8000 Hz), good visual acuity with or without correction (Snellen criterion of 20/25 – 2 [2 or fewer errors per eye]), and average or above-average intelligence. All children completed the Kaufman Brief Intelligence Test, second edition (K-BIT II), which provides an estimate of intellectual ability. No formal intelligence screening was completed on adults. The adult group consisted of college-educated individuals and undergraduate students at Vanderbilt University with no history of learning difficulties (per self-report). Hearing and vision screenings were completed at the start of the session and intelligence testing followed completion of the multisensory task.

2.2. Stimuli and experimental design

A point of subjective simultaneity (PSS) judgment task (adapted from Fujisaki, Shimojo, Kashino, & Nishida, 2004) was administered to each participant twice (i.e., assessments 1 and 2). Subjects were seated in a quiet, dimly lit room approximately 48 cm from a high refresh-rate computer monitor (ViewSonic E70fB, 120 Hz). A white crosshair fixation marker (1 cm × 1 cm) was situated in the center of a black background on the computer screen for the duration of the experiment. Auditory (8 ms duration, 1800 Hz tone burst, 99.2 peak dB SPL [unweighted]) and visual (8 ms duration, white ring flash subtending 15° of space, outer diameter = 12.4 cm, inner diameter = 6.0 cm, area = 369.8 cm²) stimulus pairs were presented in a randomly interleaved fashion at the following visual–auditory SOAs: 0, ±50, ±100, ±150, ±200, ±250, ±350 and ±450 ms (Fig. 1). The auditory stimulus was presented via Etymotic Research ER-3A insert earphones and auditory and visual stimulus durations and SOAs were verified externally with an oscilloscope. Whereas positive values represent visual stimuli leading auditory stimuli, negative values represent the opposite. A total of 330 responses were collected during each assessment (22 samples × 15 SOAs). Each new trial was initiated 1 s after the participant logged his/her response to the previous trial. On the infrequent occasion that a participant failed to log his/her response within approximately 5 s of stimulus presentation, he/she was prompted to make a response by the experimenter. Total test time for the multisensory task was approximately 25 min, including breaks. Stimulus delivery and data logging were controlled by E-Prime 2.0 (2.0.1.109).
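The trial structure described above (15 SOAs, 22 repetitions each, randomly interleaved) can be sketched in a few lines of Python. This is an illustration only: the study itself used E-Prime, and the names `SOAS_MS`, `REPEATS_PER_SOA`, and `build_trial_order` are hypothetical.

```python
import random

# Visual-auditory SOAs in ms; positive = visual leads, negative = auditory leads.
SOAS_MS = [-450, -350, -250, -200, -150, -100, -50, 0,
           50, 100, 150, 200, 250, 350, 450]
REPEATS_PER_SOA = 22  # 22 samples x 15 SOAs = 330 trials per assessment


def build_trial_order(seed=None):
    """Return a randomly interleaved list of SOAs for one assessment."""
    rng = random.Random(seed)
    trials = [soa for soa in SOAS_MS for _ in range(REPEATS_PER_SOA)]
    rng.shuffle(trials)
    return trials


trials = build_trial_order(seed=1)
print(len(trials))  # total number of trials in one assessment
```

Passing a fixed `seed` makes a given order reproducible; whether the original experiment fixed its randomization in this way is not stated in the text.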

Fig. 1.

Simultaneity Judgment Paradigm. Profile of the temporal relationship between stimuli used in assessments.

Instructions were read aloud for both groups and the task was initiated by the experimenter (see Appendices 1 and 2 for adult and child instructions). For the children, the task objectives were placed in the context of a story that distinguished the auditory–visual communication styles of boy and girl lightning bugs. Following the story, children answered a circumscribed list of questions to ensure proper understanding of the task and the capacity to distinguish between the bug images. Behavioral judgments (i.e., simultaneous or asynchronous) were recorded by pressing buttons labeled with numbers (1 or 4, adults) or lightning bug images (blue [male] or red [female], children) on a response box (Psychology Software Tools Response Box Model 200A). Bug images had characteristics other than color to aid in distinguishing their gender (as screenings did not include testing for color vision deficiency). Responses were counterbalanced across participants such that the buttons associated with simultaneity and non-simultaneity were reversed for half of the subjects. A follow-up experiment in which a novel group of adults (n = 8) were instructed using the story-based technique employed with the children revealed no significant effect of instruction method on responses compared to those of 8 randomly selected adults from our experimental group.

2.3. Data analysis and temporal window derivation

Differences in window size and simultaneity judgment at sampled SOAs were assessed between groups and across assessments using independent-samples t-tests and repeated-measures analysis of variance (rmANOVA). To correct for dependence among the repeated measures within subjects, Greenhouse–Geisser corrections were performed. Because no significant effect of session was observed for measures of window size (Supplement 1), the data were collapsed across assessments. However, for analysis of within-session effects (e.g., effects resulting from fatigue or task-learning), responses were divided between the first and second halves of the assessments.

The mean probability of simultaneity report (i.e., number of simultaneous responses as a proportion of total responses) was calculated at each SOA for all participants. Points were fit to create a distribution which served as the basis for determining the size of the temporal binding window. Sigmoids were fit to the discretely sampled points from each half of the distribution (left: −450 to 0 ms, right: 0 to +450 ms) for all subjects. Distributions were produced from a two-by-two matrix composed of interpolated y values (probability of simultaneity report) at x values (time points) ranging from −600 to +600 ms in 0.1 ms increments. The criterion used to characterize the size of the multisensory temporal binding window for each subject was the width of this distribution at 3/4 maximum.
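The width-at-3/4-maximum criterion can be illustrated with a simplified sketch. Note the assumptions: the code below uses linear interpolation between sampled SOAs rather than the sigmoid fits described above, the function name `window_width` is hypothetical, and the example curve is synthetic rather than data from this study.

```python
def window_width(soas, p_sim, criterion=0.75):
    """Width (ms) of a simultaneity-report curve at `criterion` times its peak.

    soas  : SOAs in ascending order (ms)
    p_sim : probability of simultaneity report at each SOA
    """
    peak = max(p_sim)
    level = criterion * peak
    i_peak = p_sim.index(peak)

    def crossing(indices):
        # Walk outward from the peak and interpolate linearly at the first
        # point where the curve falls below the criterion level.
        prev = i_peak
        for i in indices:
            if p_sim[i] < level:
                x0, y0 = soas[prev], p_sim[prev]
                x1, y1 = soas[i], p_sim[i]
                return x0 + (level - y0) * (x1 - x0) / (y1 - y0)
            prev = i
        return soas[prev]  # curve never dropped below the level on this side

    left = crossing(range(i_peak - 1, -1, -1))
    right = crossing(range(i_peak + 1, len(soas)))
    return right - left


# Synthetic, symmetric example curve (not study data).
example_soas = [-200, -100, 0, 100, 200]
example_p = [0.0, 0.5, 1.0, 0.5, 0.0]
print(window_width(example_soas, example_p))
```

Because the left and right crossings are located independently, an asymmetric curve simply yields unequal left and right half-widths, which is the property the group comparison in the Results relies on.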

3. Results

3.1. Defining group differences in window size

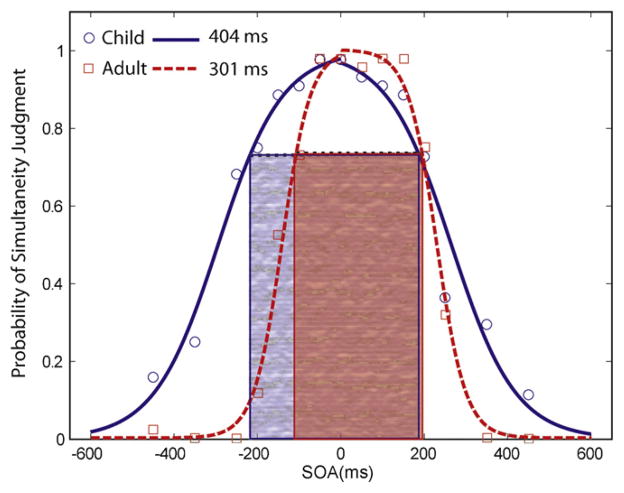

The mean multisensory temporal binding window for the 10 and 11 year old children (413 ms) was found to be significantly wider than that of the adults (299 ms) (t(25) = 3.945, p = 0.001; Table 1 and Fig. 2). Although several children had window sizes comparable to the adult mean, more than a third of the children had windows in excess of 450 ms, a value never seen in adults. In general, children were found to have more symmetric distributions when compared with adults, as illustrated by the comparison in two representative subjects shown in Fig. 3.

Table 1.

Summary table of differences in window size between children and adults (assessments averaged).

| | Mean WS (ms) | SEM | t | df | p |
|---|---|---|---|---|---|
| Child group (n = 13) | 412.762 | 23.155 | 3.945 | 25 | *0.001 |
| Adult group (n = 14) | 298.929 | 17.642 | | | |
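As a consistency check, the t statistic in Table 1 can be recovered from the reported means, SEMs, and group sizes alone. The sketch below assumes a pooled-variance (equal variances) independent-samples t-test; the function name `pooled_t_from_summary` is hypothetical.

```python
import math


def pooled_t_from_summary(mean1, sem1, n1, mean2, sem2, n2):
    """Equal-variance independent-samples t computed from summary statistics."""
    var1 = (sem1 ** 2) * n1  # SEM = SD / sqrt(n), so SD^2 = SEM^2 * n
    var2 = (sem2 ** 2) * n2
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * var1 + (n2 - 1) * var2) / df
    se_diff = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se_diff, df


# Values taken from Table 1 (children first, then adults).
t, df = pooled_t_from_summary(412.762, 23.155, 13, 298.929, 17.642, 14)
print(f"t({df}) = {t:.3f}")
```

Under these assumptions the result agrees with the tabled t(25) = 3.945 to three decimal places.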

Fig. 2.

Left: Bar graph displays group difference in multisensory temporal window size (i.e., distribution width at 3/4 maximum) for children (C) and adults (A). Error bars represent ±one standard error of the mean (SEM). Middle/Right: Scatterplot of individual window sizes for each child (circles) and adult (squares). Solid lines represent mean values and dotted lines denote the area encompassing ±1 SEM.

Fig. 3.

Graph displaying windows for representative child and adult subjects. Note that the discrepancy in window size between subjects is primarily comprised of differences in the left side of the distribution.

3.2. Defining group differences in the probability of simultaneity judgment

Although the comparison of mean window size provided a global measure of group differences in the multisensory temporal binding window, additional analyses were performed to further delineate the temporal structure and nature of the age-related differences in participants’ reports of simultaneity/asynchrony. A repeated-measures ANOVA with a within-subject factor of SOA and a between-subject factor of group revealed a significant main effect of group and SOA and an SOA by group interaction, p < 0.01, all tests (Table 2). These results indicate group differences in simultaneity judgment that differ across SOA conditions, or distinctions between groups at select SOAs.

Table 2.

Summary table of significant effects from a repeated-measures ANOVA using a repeated-measures factor of SOA and a between subject factor of group (assessments averaged).

| Factor | F | df factor | df error | p |
|---|---|---|---|---|
| SOA | 154.691 | 3.502 | 87.548 | *0.000 |
| Group | 15.120 | 1 | 25 | *0.001 |
| SOA × group | 4.094 | 3.502 | 87.548 | *0.006 |
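The fractional degrees of freedom in Table 2 follow from the Greenhouse–Geisser correction, which multiplies the uncorrected degrees of freedom by an estimate ε. The value of ε is not reported in the text; the sketch below infers it from the tabled df, so it should be read as an approximate reconstruction rather than a reported result.

```python
N_SOAS = 15       # levels of the within-subject factor
N_SUBJECTS = 27   # 13 children + 14 adults
N_GROUPS = 2

# Uncorrected degrees of freedom for the SOA effect and its error term.
df_factor_uncorrected = N_SOAS - 1                                      # 14
df_error_uncorrected = df_factor_uncorrected * (N_SUBJECTS - N_GROUPS)  # 350

# Infer epsilon from the corrected factor df reported in Table 2 (assumption).
REPORTED_DF_FACTOR = 3.502
epsilon = REPORTED_DF_FACTOR / df_factor_uncorrected

# Apply the same epsilon to the error term.
df_error_corrected = epsilon * df_error_uncorrected

print(f"epsilon ~ {epsilon:.3f}, corrected error df ~ {df_error_corrected:.2f}")
```

The corrected error df obtained this way is close to the tabled 87.548, with the small difference attributable to rounding of the reported factor df.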

Follow-up contrasts (i.e., independent samples’ t-tests) were performed to identify which SOAs contributed to these group differences. A Bonferroni correction was applied to reduce the risk of elevation of familywise error due to the number of comparisons (i.e., 15), resulting in a more stringent criterion value for significance (p < 0.0033). Equal variance between groups was assumed for all comparisons unless otherwise noted based on results from Levene’s test for equality of variances. Significant differences in the likelihood of simultaneity report were observed for the following conditions: A350V, A250V, A200V, A150V (Fig. 4, Table 3) – moderate to moderately long SOAs in which the onset of the auditory stimulus preceded the onset of the visual stimulus. A marginally significant difference was noted between groups at the most extreme auditory leading visual SOA (A450V), p = 0.004. In contrast, no differences in simultaneity report were observed in the objectively simultaneous condition, at short stimulus onset asynchronies (e.g., −50 ms, −100 ms) in which the auditory stimulus was leading, or in any of the conditions in which the visual stimulus preceded the auditory stimulus.
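The Bonferroni criterion used above is the nominal α divided by the number of comparisons. The short sketch below reproduces that arithmetic and applies it to the two p values that are reported to three decimals for the longest auditory-leading SOAs; variable names are illustrative.

```python
ALPHA = 0.05
N_COMPARISONS = 15  # one follow-up contrast per SOA

threshold = ALPHA / N_COMPARISONS  # Bonferroni-corrected criterion

# p values as reported in the text (A450V) and in Table 3 (A350V).
reported_p = {"A450V": 0.004, "A350V": 0.003}
survives = {cond: p < threshold for cond, p in reported_p.items()}

print(f"threshold = {threshold:.4f}")
print(survives)
```

This makes explicit why the A450V contrast is described as only marginally significant: its p value falls just above the corrected criterion, whereas A350V falls just below it.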

Fig. 4.

Grand averaged group data distributions. Children were significantly more likely to report trials as simultaneous at moderate and long SOAs in which the auditory stimulus preceded the ring flash. Error bars represent ±1 SEM, **sig Bonferroni p < 0.0033.

Table 3.

Summary table of SOAs showing significant group differences in probability of simultaneity report (assessments averaged).

| | Mean | SEM | Min | Max | t | df | p |
|---|---|---|---|---|---|---|---|
| A350V^a | | | | | | | |
| Child (n = 13) | 0.243 | 0.039 | 0.045 | 0.409 | 3.404 | 20.143 | *0.003 |
| Adult (n = 14) | 0.086 | 0.024 | 0.000 | 0.273 | | | |
| A250V | | | | | | | |
| Child (n = 13) | 0.476 | 0.048 | 0.159 | 0.682 | 4.797 | 25 | *0.000 |
| Adult (n = 14) | 0.183 | 0.039 | 0.000 | 0.477 | | | |
| A200V | | | | | | | |
| Child (n = 13) | 0.636 | 0.046 | 0.318 | 0.886 | 5.311 | 25 | *0.000 |
| Adult (n = 14) | 0.297 | 0.045 | 0.114 | 0.591 | | | |
| A150V | | | | | | | |
| Child (n = 13) | 0.766 | 0.031 | 0.591 | 0.978 | 4.208 | 25 | *0.000 |
| Adult (n = 14) | 0.552 | 0.040 | 0.318 | 0.795 | | | |

^a Equal variances not assumed for the A350V condition only.

3.3. Summary of between and within assessment group effects

In an effort to assess possible fatigue and procedural or perceptual learning effects, subjects’ responses were compared across the two assessments (Fig. 5). These pseudocolor plots serve to reinforce the difference between children and adults in the size of the temporal window (C–A, bottom contrast – note the difference being more pronounced on the left side of the distributions), as well as showing equivalent performance between the two assessments (A1–A2, right contrast).

Fig. 5.

Smoothed pseudocolor plots depicting mean probability of simultaneity judgment for each group on consecutive trials (top left) where warmer colors indicate a higher probability of simultaneity report and cooler colors represent a higher likelihood of asynchrony report. Trials are aligned from first (top) to last (bottom). Contrast plots reveal differences in behavioral report between groups (bottom) and across assessments (top right). For these contrast plots warmer and cooler colors represent positive and negative remainders, respectively; green is neutral or no change. Note the consistency of responses across assessments for both groups (top right) and the group difference in the simultaneity report at negative SOAs (bottom).

Despite the lack of differences across assessments for either group, additional analyses were performed to assay more rapid within-session changes. In this analysis, windows were derived from averaged responses collected during the first half of assessments 1 and 2 and compared to those collected in the latter half of the assessments. In adults, no significant within assessment effects were observed (p > 0.05). In contrast, windows derived from children’s responses on the last half of trials were larger than those from the first half of responses, t(12) = −2.496, p = 0.028 (Table 4). Mean window size in children differed by 79 ms, whereas in adults this difference was only 12 ms.

Table 4.

Summary table showing significant differences in children’s windows derived from averaged responses during the first half of the assessments relative to the latter part of the assessments.

| | Mean | SEM | t | df | p |
|---|---|---|---|---|---|
| A12 first | | | | | |
| Child (n = 13) | 370.046 | 23.931 | 2.972 | 12 | *0.028 |
| A12 last | | | | | |
| Child (n = 13) | 449.462 | 34.094 | | | |
| A12 first | | | | | |
| Adult (n = 14) | 291.100 | 17.012 | 0.788 | 13 | 0.445 |
| A12 last | | | | | |
| Adult (n = 14) | 279.057 | 23.791 | | | |

To determine whether the widened distributions exhibited by children during the latter part of each experiment were driving the group differences, window sizes derived from the earlier and later trials were compared across groups. Results of independent samples’ t tests revealed significant group differences in both the beginning and end of assessments 1 and 2 (Table 5). While these findings suggest that children fatigued more quickly than adults, illustrated by window widening in the latter half of the assessments, the enlargement of window size was still evident in the earliest trials. Thus, while group differences may be more pronounced in the latter portion of the assessments, age effects are apparent throughout the experimental task suggesting that differential fatigue and/or learning effects cannot fully account for group differences.

Table 5.

Summary table of group differences in windows derived from averaged responses from the first half of trials in both assessments and then the latter half of trials.

| | Mean | SEM | t | df | p |
|---|---|---|---|---|---|
| A12 first | | | | | |
| Child (n = 13) | 370.046 | 23.931 | 2.719 | 25 | *0.012 |
| Adult (n = 14) | 291.100 | 17.012 | | | |
| A12 last | | | | | |
| Child (n = 13) | 449.462 | 34.094 | 4.148 | 25 | *0.000 |
| Adult (n = 14) | 279.057 | 23.791 | | | |

4. Discussion

The current study used an audiovisual point of subjective simultaneity task to reveal age-related differences in the temporal window of multisensory integration. Most surprisingly, the results illustrate that even in children ages 10 and 11 the multisensory temporal binding window is far from mature. As discussed below, such a result has important implications in a variety of domains.

The broader temporal binding window in children appears to be driven largely by significant differences in the maturation of the left side of the measured response distributions. Children were significantly more likely to report simultaneity under conditions in which the auditory stimulus led the visual stimulus when compared with adults (but not the converse). In essence, the results show greater symmetry in the overall response distributions in children when compared with adults, and suggest that the narrowing of the left side of the distribution must take place after ages 10–11. The reason for this asymmetrical developmental effect is unknown, but may be related to the physical characteristics of audiovisual stimuli in a naturalistic environment. Under realistic circumstances, for a given stimulus event that generates multisensory energies, visual signals will always lead the associated auditory signals (a result of the fact that visual signals travel at the speed of light whereas auditory signals are delayed in a distance-dependent manner). Consequently, extensive experience with audiovisual stimuli during development may drive the meaningful side of the distribution (positive SOAs) to mature more quickly. Alternatively, if windows are symmetric at birth, the left side may become mature later given that more contraction is required to reach the adult state due to the classic window asymmetry noted in adults (steeper slopes for auditory leading SOAs). This still leaves open the question as to why the left side matures so late (or changes at all given its lack of real world relevance).
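To give a sense of scale for the distance-dependent auditory delay mentioned above, the following sketch computes the physical arrival lag of sound relative to light for a source at a given distance. It assumes a speed of sound of roughly 343 m/s (air at about 20 °C) and considers physical travel time only; the function name `auditory_lag_ms` is hypothetical.

```python
SPEED_OF_SOUND_M_PER_S = 343.0          # approximate, air at about 20 degrees C
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def auditory_lag_ms(distance_m):
    """Arrival lag (ms) of sound relative to light from a source at distance_m."""
    sound_time_s = distance_m / SPEED_OF_SOUND_M_PER_S
    light_time_s = distance_m / SPEED_OF_LIGHT_M_PER_S
    return 1000.0 * (sound_time_s - light_time_s)


for d in (1, 10, 34.3):
    print(f"{d} m -> {auditory_lag_ms(d):.1f} ms")
```

On these assumptions the lag grows by roughly 3 ms per meter, so physical auditory lags comparable to the larger positive SOAs tested here would correspond to sources tens of meters away.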

Mechanistically, we presume that the enlarged temporal binding window may reflect anatomical and/or physiological differences in the circuits that serve to bind together multisensory cues. Several neuroimaging studies in adults have begun to identify important nodes in a network subserving audiovisual simultaneity perception. These include the insula (Calvert, Hansen, Iversen, & Brammer, 2001), inferior parietal lobule (Calvert et al., 2001; Dhamala et al., 2007), superior colliculus (Calvert et al., 2001; Dhamala et al., 2007), posterior superior temporal sulcus (Calvert, Campbell, & Brammer, 2000; Calvert et al., 2001; Dhamala et al., 2007), and unisensory cortices (Noesselt et al., 2007). These latter two have also been shown to exhibit altered BOLD activity and effective connectivity after training on a simultaneity judgment task (Powers et al., Unpublished results). Incomplete myelination of tracts incorporated in the networks involved in multisensory processing may contribute to variable neural propagation times, which could partly account for enlarged windows seen in infants (Lewkowicz, 1996). In addition, maturational differences are likely to result, at least in part, from the slow functional maturation of cortical networks involved in these binding processes. Neuroimaging studies have revealed a surprisingly extended time course for the complete maturation of cortical function. Linear increases in white matter volume have been reported throughout the brain from early childhood through the second decade of life (4–20 years of age). Gray matter volume reportedly peaks during adolescence or early adulthood (depending on brain region) and declines thereafter with higher order association cortices (i.e., prefrontal cortex, superior temporal cortex) maturing up to and potentially beyond 20 years of age (Giedd et al., 1999; Gogtay et al., 2004; Pfefferbaum et al., 1994).

Another important determinant in the development of multisensory functioning is the acquisition of increasing amounts of sensory experience. Prior work has suggested a hierarchy of multisensory temporal processing abilities given that different audiovisual capabilities mature at different rates. Thus, while detection of audiovisual synchrony has been observed in infants as young as 4 months, identification of duration-based correspondences (between synchronous audiovisual stimuli) and sensitivity to rate-based manipulations does not emerge until 6 months and 10 months of age, respectively (Lewkowicz & Lickliter, 1994). It has also been speculated that shifts in sensory dominance may contribute to changes in infants’ responsiveness to intersensory incongruence. Discrepancies between the onset of functional hearing (third trimester) and vision (birth) can lead to increased reliance and attention to the more developmentally mature sense, audition, in early life. To that end, while younger (6 month old) infants are exclusively able to detect audiovisual intersensory incongruence when the auditory stimulus or both the auditory and visual stimuli are manipulated, older (10 month old) babies with more visual experience show detection of auditory, visual or audiovisual stimulus manipulations (Lewkowicz & Lickliter, 1994). Thus, as infants gain more experience interacting with the sensory world, their intersensory matching abilities and strategies are modified.

Behavioral studies in pre-adolescent children and adults also suggest an age and experience dependent change in multisensory processing and report a link between performance improvements and optimization of statistical cue weighting. It has been theorized that different sensory inputs are weighted on the basis of their relative reliability when combined within the nervous system (Alais & Burr, 2004; Ernst & Banks, 2002; Helbig & Ernst, 2007; Wozny, Beierholm, & Shams, 2008). Because sensory systems mature at different rates, the relative reliability of the information coded by each system shifts, subsequently altering the weights attributed to respective cues. Interestingly, and germane to the current study, recent work has suggested that this statistical optimization of multisensory integration is not realized until middle childhood (Gori et al., 2008; Nardini et al., 2008). If cue reliability weightings are calculated over a specific temporal interval, then the delayed maturation of the multisensory temporal binding window may interfere with the optimality of this process. Future work that relates the developmental time course of multisensory temporal function and statistical optimality will shed light on this possibility.
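The reliability-weighting idea referred to above is commonly formalized as inverse-variance weighting, in which each cue's weight is proportional to the reciprocal of its variance. The sketch below illustrates that standard formulation with made-up numbers; the function name `combine_cues` and the example values are hypothetical and are not taken from any of the cited studies.

```python
def combine_cues(estimates, sigmas):
    """Inverse-variance (reliability-weighted) combination of cue estimates.

    estimates : single-cue estimates of the same quantity
    sigmas    : standard deviation (noise) of each single-cue estimate
    Returns (weights, combined_estimate, combined_sigma).
    """
    reliabilities = [1.0 / (s ** 2) for s in sigmas]
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]
    combined = sum(w * e for w, e in zip(weights, estimates))
    combined_sigma = (1.0 / total) ** 0.5
    return weights, combined, combined_sigma


# Illustrative values only: a precise cue (sigma = 1) and a noisier cue (sigma = 2).
weights, combined, combined_sigma = combine_cues([10.0, 12.0], [1.0, 2.0])
print(weights, combined, combined_sigma)
```

In this formulation the combined estimate is pulled toward the more reliable cue and its standard deviation is smaller than that of either cue alone, which is the sense in which the integration is described as statistically optimal.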

While research has suggested that there is a seemingly extended sensitive period for the acquisition of multisensory-based skills, it must be emphasized that multisensory integration is a multifaceted process that likely involves maturation within multiple domains. Thus, in addition to the temporal factors examined in the current study, spatial, effectiveness and semantic factors also contribute to the final integrative product. Each of these domains may mature at a different rate, and an analysis of the developmental trajectory of each will be extraordinarily informative for both normative and clinical studies. The establishment of maturational milestones in multisensory development could be crucial in predicting certain developmental disabilities that have been associated with abnormal multisensory processing, such as dyslexia and autism (Hairston, Burdette, Flowers, Wood, & Wallace, 2005; Hari & Renvall, 2001; Laasonen, Service, & Virsu, 2001, 2002; Laasonen, Tomma-Halme, Lahti-Nuuttila, Service, & Virsu, 2000; Lovaas, Schreibman, Koegel, & Rehm, 1971; Mongillo et al., 2008; Smith & Bennetto, 2007; Virsu, Lahti-Nuuttila, & Laasonen, 2003; Williams, Massaro, Peel, Bosseler, & Suddendorf, 2004).

The goal of the current study was to document age-related temporal multisensory processing differences between adults and typically developing 10 and 11 year old children. Future research will expand this to additional age groups with the ultimate goal of creating a developmental trajectory for normative multisensory temporal processing. Identification of these benchmarks and chronology will be of tremendous use in the screening and treatment of these developmental disabilities. For example, recent work has found that perceptual training on a simultaneity judgment task identical to that employed here can narrow the multisensory temporal window in typical adults (Powers et al., 2009). Application of such training methods to impaired populations could hold great promise in the remediation of multisensory deficits, and by extension those higher order processes dependent on the faithful binding of multisensory cues.

Acknowledgments

We thank Drs. Linda Hood, Wesley Grantham, Alexandra Key and David Royal for their intellectual contributions as well as Dr. Lynnette Henderson for her assistance with subject recruitment. This work was supported by the Vanderbilt University Kennedy Center and the National Institute on Deafness and Other Communication Disorders (Grant F30 DC009759).

Appendix A. Supplementary data

Supplementary data associated with this article can be found, in the online version, at doi:10.1016/j.neuropsychologia.2010.11.041.

References

- Alais D, Burr D. The ventriloquist effect results from near-optimal bimodal integration. Current Biology. 2004;14(3):257–262. doi: 10.1016/j.cub.2004.01.029.

- Bahrick LE. Infants’ perception of substance and temporal synchrony in multimodal events. Infant Behavior & Development. 1983;6:429–451.

- Bahrick LE. Infants’ intermodal perception of two levels of temporal structure in natural events. Infant Behavior & Development. 1987;10:387–416.

- Bahrick LE. Intermodal learning in infancy: Learning on the basis of two kinds of invariant relations in audible and visible events. Child Development. 1988;59(1):197–209.

- Barutchu A, Danaher J, Crewther SG, Innes-Brown H, Shivdasani MN, Paolini AG. Audiovisual integration in noise by children and adults. Journal of Experimental Child Psychology. 2010;105(1–2):38–50. doi: 10.1016/j.jecp.2009.08.005.

- Bushara KO, Grafman J, Hallett M. Neural correlates of auditory–visual stimulus onset asynchrony detection. The Journal of Neuroscience. 2001;21(1):300–304. doi: 10.1523/JNEUROSCI.21-01-00300.2001.

- Calvert GA, Campbell R, Brammer MJ. Evidence from functional magnetic resonance imaging of crossmodal binding in the human heteromodal cortex. Current Biology. 2000;10(11):649–657. doi: 10.1016/s0960-9822(00)00513-3.

- Calvert GA, Hansen PC, Iversen SD, Brammer MJ. Detection of audio-visual integration sites in humans by application of electrophysiological criteria to the BOLD effect. Neuroimage. 2001;14(2):427–438. doi: 10.1006/nimg.2001.0812.

- Colonius H, Diederich A. Multisensory interaction in saccadic reaction time: A time-window-of-integration model. Journal of Cognitive Neuroscience. 2004;16(6):1000–1009. doi: 10.1162/0898929041502733.

- Dhamala M, Assisi CG, Jirsa VK, Steinberg FL, Kelso JA. Multisensory integration for timing engages different brain networks. Neuroimage. 2007;34(2):764–773. doi: 10.1016/j.neuroimage.2006.07.044.

- Dixon NF, Spitz L. The detection of auditory visual desynchrony. Perception. 1980;9(6):719–721. doi: 10.1068/p090719.

- Ernst MO. Multisensory integration: A late bloomer. Current Biology. 2008;18(12):R519–R521. doi: 10.1016/j.cub.2008.05.002.

- Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415(6870):429–433. doi: 10.1038/415429a.

- Frens MA, Van Opstal AJ, Van der Willigen RF. Spatial and temporal factors determine auditory–visual interactions in human saccadic eye movements. Perception and Psychophysics. 1995;57(6):802–816. doi: 10.3758/bf03206796.

- Fujisaki W, Shimojo S, Kashino M, Nishida S. Recalibration of audiovisual simultaneity. Nature Neuroscience. 2004;7(7):773–778. doi: 10.1038/nn1268.

- Giedd JN, Blumenthal J, Jeffries NO, Castellanos FX, Liu H, Zijdenbos A, et al. Brain development during childhood and adolescence: A longitudinal MRI study. Nature Neuroscience. 1999;2(10):861–863. doi: 10.1038/13158.

- Gogtay N, Giedd JN, Lusk L, Hayashi KM, Greenstein D, Vaituzis AC, et al. Dynamic mapping of human cortical development during childhood through early adulthood. Proceedings of the National Academy of Sciences of the United States of America. 2004;101(21):8174–8179. doi: 10.1073/pnas.0402680101.

- Gori M, Del Viva M, Sandini G, Burr DC. Young children do not integrate visual and haptic form information. Current Biology. 2008;18(9):694–698. doi: 10.1016/j.cub.2008.04.036.

- Grant KW, Seitz PF. The use of visible speech cues for improving auditory detection of spoken sentences. The Journal of the Acoustical Society of America. 2000;108(3 Pt 1):1197–1208. doi: 10.1121/1.1288668.

- Hairston WD, Burdette JH, Flowers DL, Wood FB, Wallace MT. Altered temporal profile of visual–auditory multisensory interactions in dyslexia. Experimental Brain Research. 2005;166(3–4):474–480. doi: 10.1007/s00221-005-2387-6.

- Hari R, Renvall H. Impaired processing of rapid stimulus sequences in dyslexia. Trends in Cognitive Sciences. 2001;5(12):525–532. doi: 10.1016/s1364-6613(00)01801-5.

- Helbig HB, Ernst MO. Optimal integration of shape information from vision and touch. Experimental Brain Research. 2007;179(4):595–606. doi: 10.1007/s00221-006-0814-y.

- Hughes HC, Reuter-Lorenz PA, Nozawa G, Fendrich R. Visual–auditory interactions in sensorimotor processing: Saccades versus manual responses. Journal of Experimental Psychology: Human Perception and Performance. 1994;20(1):131–153. doi: 10.1037//0096-1523.20.1.131.

- Koppen C, Spence C. Audiovisual asynchrony modulates the Colavita visual dominance effect. Brain Research. 2007;1186:224–232. doi: 10.1016/j.brainres.2007.09.076.

- Laasonen M, Service E, Virsu V. Temporal order and processing acuity of visual, auditory, and tactile perception in developmentally dyslexic young adults. Cognitive, Affective & Behavioral Neuroscience. 2001;1(4):394–410. doi: 10.3758/cabn.1.4.394.

- Laasonen M, Service E, Virsu V. Crossmodal temporal order and processing acuity in developmentally dyslexic young adults. Brain and Language. 2002;80(3):340–354. doi: 10.1006/brln.2001.2593.

- Laasonen M, Tomma-Halme J, Lahti-Nuuttila P, Service E, Virsu V. Rate of information segregation in developmentally dyslexic children. Brain and Language. 2000;75(1):66–81. doi: 10.1006/brln.2000.2326.

- Lewkowicz DJ. Developmental changes in infants’ bisensory response to synchronous durations. Infant Behavior & Development. 1986;9(3):335–353.

- Lewkowicz DJ. Infants’ response to temporally based intersensory equivalence: The effect of synchronous sounds on visual preferences for moving stimuli. Infant Behavior & Development. 1992;15(3):297–324.

- Lewkowicz DJ. Perception of auditory–visual temporal synchrony in human infants. Journal of Experimental Psychology: Human Perception & Performance. 1996;22(5):1094–1106. doi: 10.1037//0096-1523.22.5.1094.

- Lewkowicz DJ. Infants’ perception of the audible, visible and bimodal attributes of multimodal syllables. Child Development. 2000;71(5):1241–1257. doi: 10.1111/1467-8624.00226.

- Lewkowicz DJ, Lickliter R, editors. The development of intersensory perception: Comparative perspectives. Hillsdale, NJ: Lawrence Erlbaum Associates, Inc; 1994.

- Lovaas OI, Schreibman L, Koegel R, Rehm R. Selective responding by autistic children to multiple sensory input. Journal of Abnormal Psychology. 1971;77(3):211–222. doi: 10.1037/h0031015.

- Lovelace CT, Stein BE, Wallace MT. An irrelevant light enhances auditory detection in humans: A psychophysical analysis of multisensory integration in stimulus detection. Brain Research. Cognitive Brain Research. 2003;17(2):447–453. doi: 10.1016/s0926-6410(03)00160-5.

- Massaro DW. Children’s perception of visual and auditory speech. Child Development. 1984;55(5):1777–1788.

- McGurk H, MacDonald J. Hearing lips and seeing voices. Nature. 1976;264(5588):746–748. doi: 10.1038/264746a0.

- Mongillo EA, Irwin JR, Whalen DH, Klaiman C, Carter AS, Schultz RT. Audiovisual processing in children with and without autism spectrum disorders. Journal of Autism and Developmental Disorders. 2008;38(7):1349–1358. doi: 10.1007/s10803-007-0521-y.

- Munhall KG, Gribble P, Sacco L, Ward M. Temporal constraints on the McGurk effect. Perception and Psychophysics. 1996;58(3):351–362. doi: 10.3758/bf03206811.

- Nardini M, Jones P, Bedford R, Braddick O. Development of cue integration in human navigation. Current Biology. 2008;18(9):689–693. doi: 10.1016/j.cub.2008.04.021.

- Noesselt T, Rieger JW, Schoenfeld MA, Kanowski M, Hinrichs H, Heinze HJ, et al. Audiovisual temporal correspondence modulates human multisensory superior temporal sulcus plus primary sensory cortices. The Journal of Neuroscience. 2007;27(42):11431–11441. doi: 10.1523/JNEUROSCI.2252-07.2007.

- Pfefferbaum A, Mathalon DH, Sullivan EV, Rawles JM, Zipursky RB, Lim KO. A quantitative magnetic resonance imaging study of changes in brain morphology from infancy to late adulthood. Archives of Neurology. 1994;51(9):874–887. doi: 10.1001/archneur.1994.00540210046012.

- Powers AR, Hevey M, Wallace MT. Neural correlates of multisensory perceptual training. (Unpublished results)

- Powers AR, 3rd, Hillock AR, Wallace MT. Perceptual training narrows the temporal window of multisensory binding. The Journal of Neuroscience. 2009;29(39):12265–12274. doi: 10.1523/JNEUROSCI.3501-09.2009.

- Schneider TR, Debener S, Oostenveld R, Engel AK. Enhanced EEG gamma-band activity reflects multisensory semantic matching in visual-to-auditory object priming. Neuroimage. 2008;42(3):1244–1254. doi: 10.1016/j.neuroimage.2008.05.033.

- Senkowski D, Schneider TR, Tandler F, Engel AK. Gamma-band activity reflects multisensory matching in working memory. Experimental Brain Research. 2009;198(2–3):363–372. doi: 10.1007/s00221-009-1835-0.

- Senkowski D, Talsma D, Grigutsch M, Herrmann CS, Woldorff MG. Good times for multisensory integration: Effects of the precision of temporal synchrony as revealed by gamma-band oscillations. Neuropsychologia. 2007;45(3):561–571. doi: 10.1016/j.neuropsychologia.2006.01.013.

- Shams L, Kamitani Y, Shimojo S. Illusions. What you see is what you hear. Nature. 2000;408(6814):788. doi: 10.1038/35048669.

- Shams L, Kamitani Y, Shimojo S. Visual illusion induced by sound. Brain Research. Cognitive Brain Research. 2002;14(1):147–152. doi: 10.1016/s0926-6410(02)00069-1.

- Smith EG, Bennetto L. Audiovisual speech integration and lipreading in autism. Journal of Child Psychology and Psychiatry and Allied Disciplines. 2007;48(8):813–821. doi: 10.1111/j.1469-7610.2007.01766.x.

- Spelke ES. Perceiving bimodally specified events in infancy. Developmental Psychology. 1979;15:626–636.

- Sumby WH, Pollack I. Visual contributions to speech intelligibility in noise. Journal of the Acoustical Society of America. 1954;26:212–215.

- Tremblay C, Champoux F, Voss P, Bacon BA, Lepore F, Theoret H. Speech and non-speech audio-visual illusions: A developmental study. PLoS One. 2007;2(1):e742. doi: 10.1371/journal.pone.0000742.

- van Wassenhove V, Grant KW, Poeppel D. Visual speech speeds up the neural processing of auditory speech. Proceedings of the National Academy of Sciences of the United States of America. 2005;102(4):1181–1186. doi: 10.1073/pnas.0408949102.

- van Wassenhove V, Grant KW, Poeppel D. Temporal window of integration in auditory–visual speech perception. Neuropsychologia. 2007;45(3):598–607. doi: 10.1016/j.neuropsychologia.2006.01.001.

- Virsu V, Lahti-Nuuttila P, Laasonen M. Crossmodal temporal processing acuity impairment aggravates with age in developmental dyslexia. Neuroscience Letters. 2003;336(3):151–154. doi: 10.1016/s0304-3940(02)01253-3.

- Williams JH, Massaro DW, Peel NJ, Bosseler A, Suddendorf T. Visual–auditory integration during speech imitation in autism. Research in Developmental Disabilities. 2004;25(6):559–575. doi: 10.1016/j.ridd.2004.01.008.

- Wozny DR, Beierholm UR, Shams L. Human trimodal perception follows optimal statistical inference. Journal of Vision. 2008;8(3):24.1–11. doi: 10.1167/8.3.24.
