Abstract

This study examined the influence of stimulus variability and lexical difficulty on the speech perception performance of adults who used either multichannel cochlear implants or conventional hearing aids. The effects of stimulus variability were examined by comparing word identification in single-talker versus multiple-talker conditions. Lexical effects were assessed by comparing recognition of “easy” words (ie, words that occur frequently and have few phonemically similar words, or neighbors) with “hard” words (ie, words with the opposite lexical characteristics). Word recognition performance was assessed in either closed- or open-set response formats. The results demonstrated that both stimulus variability and lexical difficulty influenced word recognition performance. Identification scores were poorer in the multiple-talker than in the single-talker conditions, and scores for lexically “easy” items were better than those for “hard” items. The effects of stimulus variability were not evident when a closed-set response format was employed.

INTRODUCTION

Human listeners typically display a great deal of “perceptual robustness”. They are able to perceive and understand spoken language very accurately over a wide range of conditions that modify the acoustic-phonetic properties of the signal. Among these conditions are differences due to talkers, speaking rates, dialects, speaking styles, and alternative pronunciations, as well as several kinds of signal degradations. Previous research with listeners with normal hearing suggests that the nervous system preserves a great deal of the fine acoustic-phonetic detail present in the speech signal. Indexical properties of speech, including talker-specific attributes, are not lost or filtered out during perceptual analysis. Instead, these attributes are encoded by listeners with normal hearing, and become part of a very rich and elaborate representational system in long-term memory.1

In listeners with normal hearing, introducing stimulus variability in the acoustic signal (eg, by varying the number of talkers) decreases word identification accuracy and increases response latency.2 Varying the lexical properties of words, such as their frequency of occurrence in the language or their phonetic similarity to other words in memory, influences spoken word recognition in a similar fashion. One measure of lexical similarity is the number of “lexical neighbors,” that is, words that differ from the target word by a single phoneme. “Easy” words (high-frequency words from sparse neighborhoods) are recognized faster and with greater accuracy than “hard” words (low-frequency words from dense neighborhoods).3 At present, very little is known about the effect of stimulus variability on spoken word recognition by listeners with hearing impairment, in part because their perceptual abilities are often assessed with tests that were specifically designed to minimize variability, such as the NU-6.4 These tests consist of phonetically balanced monosyllabic words spoken by a single talker at a single speaking rate, and their items are not balanced for lexical difficulty. Including different sources of variability in measures of word recognition might provide insight into the underlying speech perception processes employed by listeners with hearing impairment.
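The neighborhood measure can be made concrete with a short sketch. It assumes that “differ by one phoneme” means a single phoneme substitution, addition, or deletion, and that each word is stored as a tuple of phoneme symbols with a frequency count. The toy lexicon, its transcriptions and frequency values, and the cutoffs separating “easy” from “hard” words are all hypothetical; they are not the lexical statistics used in this study.

```python
from typing import Dict, List, Tuple

# Hypothetical lexicon format: word -> (phoneme tuple, frequency per million).
Lexicon = Dict[str, Tuple[Tuple[str, ...], float]]

# Toy lexicon for illustration only; values are invented.
TOY_LEXICON: Lexicon = {
    "cat": (("k", "ae", "t"), 20.0),
    "bat": (("b", "ae", "t"), 15.0),
    "hat": (("h", "ae", "t"), 60.0),
    "mat": (("m", "ae", "t"), 10.0),
    "cap": (("k", "ae", "p"), 25.0),
    "at": (("ae", "t"), 900.0),
    "scat": (("s", "k", "ae", "t"), 1.0),
    "fish": (("f", "ih", "sh"), 80.0),
    "dish": (("d", "ih", "sh"), 30.0),
}


def one_phoneme_apart(a: Tuple[str, ...], b: Tuple[str, ...]) -> bool:
    """True if b differs from a by one substitution, addition, or deletion."""
    if a == b:
        return False
    if len(a) == len(b):
        # Substitution: exactly one position differs.
        return sum(x != y for x, y in zip(a, b)) == 1
    if abs(len(a) - len(b)) != 1:
        return False
    short, long_ = (a, b) if len(a) < len(b) else (b, a)
    # Addition/deletion: dropping one phoneme from the longer form yields the shorter.
    return any(long_[:i] + long_[i + 1:] == short for i in range(len(long_)))


def neighbors(word: str, lexicon: Lexicon) -> List[str]:
    """All other lexicon entries one phoneme away from the word."""
    target = lexicon[word][0]
    return [w for w, (phones, _) in lexicon.items()
            if w != word and one_phoneme_apart(target, phones)]


def classify(word: str, lexicon: Lexicon,
             freq_cutoff: float = 50.0, density_cutoff: int = 2) -> str:
    """Label a word 'easy', 'hard', or 'neither' (cutoffs are hypothetical)."""
    freq = lexicon[word][1]
    density = len(neighbors(word, lexicon))
    if freq >= freq_cutoff and density <= density_cutoff:
        return "easy"  # high frequency, sparse neighborhood
    if freq < freq_cutoff and density > density_cutoff:
        return "hard"  # low frequency, dense neighborhood
    return "neither"
```

In this toy lexicon, “cat” has six neighbors and a low frequency, so it is labeled “hard,” whereas “fish” has one neighbor and a high frequency, so it is labeled “easy.” Words that are high on one dimension and low on the other fall into neither category, which mirrors the way the easy/hard contrast combines both properties.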

EXPERIMENT 1

In the first experiment, we examined speech perception under conditions of stimulus and lexical variability in adults with a mild-to-moderate hearing loss. We also compared their performance on these conditions with their performance on a more traditional test of word recognition.

Subjects

The subjects were 12 adults between the ages of 18 and 65 years who had a mild-to-moderate bilateral sensorineural hearing loss, with speech discrimination scores of at least 50% words correct on a recorded version of the NU-6 lists. All subjects were current hearing aid (HA) users with a minimum of 3 months' experience with their sensory aids. Six of the subjects used bilateral amplification, and 6 wore an HA in only one ear. The subjects' mean age was 55.3 years.

Stimulus Materials

Both recorded monosyllabic word and sentence materials were employed. The monosyllabic word lists contained words selected from the Modified Rhyme Test (MRT).5 From a digital database containing 6,000 recorded monosyllabic words (300 MRT words recorded by 20 talkers), a subset of 100 words containing 50 “easy” and 50 “hard” words was selected by means of lexical statistics obtained from a computerized version of Webster's Pocket Dictionary.2 The single-talker condition contained 50 tokens produced by a single male talker, and the multiple-talker condition contained 50 tokens selected from 5 male and 5 female talkers. Half of the tokens in each condition were lexically “easy” and half were “hard.” The sentence materials were selected from a computerized database containing 100 Harvard sentences produced by 10 male and 10 female talkers.6 Each sentence contained 5 key words to be identified. A male talker was selected for the single-talker condition. Both the single- and multiple-talker conditions contained 48 sentences.
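One way to build word lists that satisfy these constraints is sketched below. The report does not describe how words were split between conditions or how tokens were distributed across talkers, so the details here are assumptions: the 100 selected words are divided so that each condition receives 25 “easy” and 25 “hard” words with no word repeated across conditions, and in the multiple-talker list the talkers take turns so that each contributes an equal number of tokens. The function name and talker labels are hypothetical.

```python
import random
from typing import Dict, List, Tuple

# A token is a (word, talker_id) pair; talker IDs are hypothetical labels.
Token = Tuple[str, str]


def build_lists(easy: List[str], hard: List[str], single_talker: str,
                multi_talkers: List[str], seed: int = 0) -> Dict[str, List[Token]]:
    """Split easy/hard words into two non-overlapping, lexically balanced lists."""
    if len(easy) != len(hard) or len(easy) % 2:
        raise ValueError("need equal, even numbers of easy and hard words")
    rng = random.Random(seed)
    easy, hard = easy[:], hard[:]  # copy so the caller's lists are not shuffled
    rng.shuffle(easy)
    rng.shuffle(hard)
    half = len(easy) // 2
    # Each condition gets half of the easy words and half of the hard words.
    single_words = easy[:half] + hard[:half]
    multi_words = easy[half:] + hard[half:]
    rng.shuffle(single_words)
    rng.shuffle(multi_words)
    single = [(w, single_talker) for w in single_words]
    # Assumption: talkers take turns, so each contributes an equal number of tokens.
    multiple = [(w, multi_talkers[i % len(multi_talkers)])
                for i, w in enumerate(multi_words)]
    return {"single": single, "multiple": multiple}
```

With 50 easy words, 50 hard words, and 10 talkers, each list has 50 tokens (25 easy, 25 hard) and each talker in the multiple-talker list contributes 5 tokens.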

Procedures

With the subjects seated inside a sound-treated room, the stimuli were presented in the free field at approximately 70 dB sound pressure level, a level approximating that of loud conversational speech. Subjects wore their personal amplification during testing. Subjects repeated what they heard, and the experimenter transcribed the response. For the monosyllabic words, a response was counted as correct only if it was identical to the target word; for example, the plural form of a singular target was counted as incorrect. The sentence stimuli were scored by the percentage of key words correctly identified.
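The two scoring rules can be sketched as follows. The study specifies only that word responses had to be identical to the target and that sentences were scored by the percentage of key words identified. Lowercasing, stripping punctuation, and crediting a key word whenever it appears anywhere in the repeated sentence are assumptions made for illustration, as is the choice of key words in the example Harvard sentence.

```python
import re
from typing import List


def _tokens(text: str) -> List[str]:
    # Assumed normalization: lowercase, keep only letters and apostrophes.
    return re.findall(r"[a-z']+", text.lower())


def score_word(response: str, target: str) -> bool:
    """Exact-match rule: 'cats' for the target 'cat' is scored incorrect."""
    return _tokens(response) == _tokens(target)


def score_sentence(response: str, key_words: List[str]) -> float:
    """Percentage of key words found, each matched exactly (no plural credit)."""
    said = set(_tokens(response))
    hits = sum(1 for k in key_words if k.lower() in said)
    return 100.0 * hits / len(key_words)
```

For example, if the target is “The birch canoe slid on the smooth planks” with the key words birch, canoe, slid, smooth, and planks, the response “the birch canoe slid on the smooth plank” earns credit for 4 of 5 key words, or 80%, because “plank” does not match “planks” exactly.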

Results

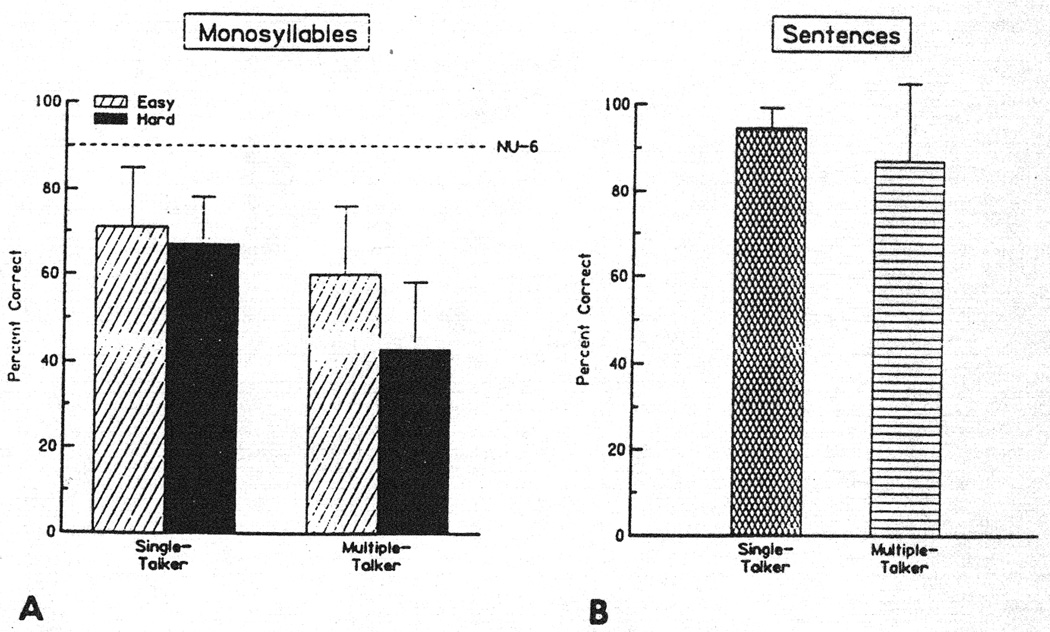

Figure 1A presents the mean percentage of “easy” and “hard” monosyllabic words correctly identified in the single-talker and multiple-talker test conditions, along with the subjects' average performance on the NU-6. Performance was better on the NU-6 than on the single- and multiple-talker conditions containing lexical variability. We used t tests to examine talker and lexical effects on word recognition. The HA subjects identified words with significantly greater accuracy in the single-talker condition than in the multiple-talker condition (p < .0005), and “easy” words were identified with significantly greater accuracy than “hard” words (p < .04). Figure 1B presents the results for the sentence materials. As expected, the subjects' word recognition scores improved when sentences were presented. Figure 1B also shows that word recognition declined significantly when multiple talkers were introduced (p < .01), despite the syntactic and semantic cues to word identity that were available in the sentence stimuli. Thus, the effects of stimulus variability appear to be relatively robust for both isolated words and sentences.

Fig. 1.

Hearing aid subjects' word recognition performance in two talker conditions for A) word and B) sentence stimuli. Percentage of monosyllabic words correctly identified as a function of lexical difficulty is also presented (A). Error bars represent 1 SD above the mean. Maximum performance = 100%.
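The report does not state whether its t tests were paired or independent. Because each subject was tested in both talker conditions, the sketch below assumes a paired (within-subject) comparison and computes only the t statistic and its degrees of freedom with the Python standard library; obtaining a p value additionally requires the t distribution, for example through SciPy's `scipy.stats.ttest_rel`. The function name is hypothetical, and the scores in the example are invented, not data from this study.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Paired t statistic and degrees of freedom for per-subject scores a vs b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    # Work on within-subject differences (e.g., single- minus multiple-talker).
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    # Standard error of the mean difference (sample SD / sqrt(n)).
    se = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / se, n - 1
```

With invented single-talker scores of 80, 70, 90, and 60 and multiple-talker scores of 70, 65, 80, and 55, the differences are 10, 5, 10, and 5, giving t ≈ 5.20 with 3 degrees of freedom.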

Discussion

Using traditional clinical criteria based on NU-6 scores, these adult listeners with mild-to-moderate hearing loss would be considered to have “good” spoken word recognition skills. However, when word recognition performance was assessed under more demanding perceptual conditions containing several sources of stimulus variability, their performance declined. These listeners not only had lower word recognition scores in the multiple-talker condition than in the single-talker condition, but they also had more difficulty making fine acoustic-phonetic discriminations among lexically difficult words in the multiple-talker conditions. That is, they identified “easy” words with greater accuracy than “hard” words in the multiple-talker condition. This finding suggests that although listeners with mild-to-moderate hearing impairment may have reduced frequency selectivity and audibility and have difficulty making fine phonetic distinctions, they do recognize words “relationally.” That is, they perceive words in the context of other phonetically similar words, just as listeners with normal hearing do.

EXPERIMENT 2

In this second experiment, we investigated the effects of stimulus variability on spoken word recognition by adult multichannel cochlear implant (CI) users, using both isolated words and sentence stimuli. In addition, the effects of stimulus variability on monosyllabic word recognition were examined in both open- and closed-set response formats.

Subjects

All subjects were 18 years or older, with a bilateral profound hearing loss. These subjects received a CI because they derived no benefit from conventional HAs. Subject selection criteria included a minimum of 6 months of CI use, and NU-6 scores ≥10% words correct. Nineteen CI users were initially tested as part of this investigation. Eleven CI subjects met the inclusion criteria; 10 used a Nucleus CI and 1 used a Clarion CI. Their mean age was approximately 49 years, and their mean length of device use was 4.3 years.

Procedures

The stimulus materials and procedures used to assess open-set speech recognition were the same as those in experiment 1, except that the CI subjects used their CI rather than an HA during testing. In addition, the CI subjects were administered a closed-set test of word identification, the Word Intelligibility by Picture Identification (WIPI).7 The WIPI consists of four different lists of 25 monosyllabic words. Subjects respond by selecting the item they heard from among a six-picture matrix. For the present study, the subjects were presented with two different recorded lists: one produced by a male talker, and one in which the items were produced by 5 male and 5 female talkers. Only 8 of the CI subjects were administered the Harvard sentences and the WIPI because of time constraints.

Results and Discussion

Figure 2A presents the mean percentage of words correctly identified for the open-set single- and multiple-talker conditions, along with the average performance on the NU-6. These CI subjects performed better on the NU-6 than on new stimulus lists containing talker or lexical variability. Talker and lexical effects were examined with t tests. Their performance was significantly better on the single-talker than on the multiple-talker conditions (p < .05). Although the CI subjects' word recognition scores were higher for lexically “easy” than for “hard” words, this trend was not significant, perhaps because of variability among individual subjects. Figure 2B presents the results for the sentence stimuli. The CI subjects' word recognition performance improved when sentence stimuli were presented. Their performance declined in the multiple-talker condition compared to the single-talker condition, but again, these differences were not significant.

Fig. 2.

Cochlear implant subjects' word recognition performance in two talker conditions for A) word and B) sentence stimuli. Percentage of monosyllabic words correctly identified as a function of lexical difficulty is also presented (A). Error bars represent 1 SD above the mean.

The Table presents a comparison of the CI subjects' recognition of isolated words as a function of talker condition and response format. As expected, these subjects' word recognition performance was better when a closed-set response format was employed. However, the Table shows that talker variability did not influence word recognition performance when the set of potential responses was greatly constrained through the use of a closed-set task.

Table.

PERCENTAGE OF WORDS CORRECTLY IDENTIFIED BY COCHLEAR IMPLANT SUBJECTS AS A FUNCTION OF TALKER CONDITION AND RESPONSE MODE

| Statistic | Open-Set Response (N=11), Single Talker | Open-Set Response (N=11), Multiple Talker | Closed-Set Response (N=8), Single Talker | Closed-Set Response (N=8), Multiple Talker |
|---|---|---|---|---|
| Mean | 15.9% | 9.14% | 43.7% | 43.6% |
| SD | 8.2% | 10.9% | 27.9% | 29.2% |

GENERAL DISCUSSION

The results suggest that listeners with sensory aids employ underlying perceptual processes that are similar to those of listeners with normal hearing. When spoken word recognition was assessed under more demanding perceptual conditions containing stimulus variability, recognition performance generally declined. For HA subjects, performance was poorer in the multiple- than in the single-talker condition for both the monosyllabic and sentence stimuli. For CI subjects, significant talker effects were noted for monosyllabic word recognition. The present results suggest that the effect of talker variability is relatively robust when an open-set response format is employed. However, when other structural cues to word recognition were present, as in the closed-set response mode, the effect of talker variability was eliminated. Last, the finding that lexically “easy” words were identified with greater accuracy than lexically “hard” words by the HA subjects suggests that listeners with hearing impairment may perceive words in the context of other phonemically similar words, just as listeners with normal hearing do. In summary, the present results suggest that stimulus variability is important in speech perception by persons with hearing impairment who demonstrate at least some open-set speech perception. It appears that these listeners with sensory aids (either HA or CI) encode instance-specific information that is present in the speech signal. Finally, the present results suggest that low-variability tests of speech recognition may not adequately assess a listener's underlying perceptual capabilities when speech must be recognized in the presence of stimulus variability, such as might be encountered in natural communication situations, when there is noise, speech input from many different talkers, or other sources of signal degradation.

ACKNOWLEDGMENTS

The authors gratefully acknowledge the assistance of Luis Hernandez and John Karl of the Speech Research Laboratory, Indiana University, Bloomington, in stimulus preparation. We also thank Susan Todd, Allyson Riley, David Ertmer, and Terri Kerr for their help with data collection and analysis.

This work was supported by the National Institutes of Health/National Institute on Deafness and Other Communication Disorders (grants DC00064, DC00111, and T32 DC00012) and by the American Speech-Language-Hearing Foundation.

Contributor Information

K. I. Kirk, Department of Otolaryngology-Head and Neck Surgery, Indiana University School of Medicine, Indianapolis, Indiana.

D. B. Pisoni, Speech Research Laboratory, Department of Psychology, Indiana University, Bloomington, Indiana.

M. S. Sommers, Department of Psychology, Washington University, St Louis, Missouri.

M. Young, Department of Otolaryngology-Head and Neck Surgery, Indiana University School of Medicine, Indianapolis, Indiana.

C. Evanson, Department of Otolaryngology-Head and Neck Surgery, Indiana University School of Medicine, Indianapolis, Indiana.

REFERENCES

1. Pisoni DB. Long-term memory in speech perception: some new findings on talker variability, speaking rate, and perceptual learning. Speech Communication. 1993;13:109–125. doi: 10.1016/0167-6393(93)90063-q.

2. Pisoni DB. Some comments on invariance, variability and perceptual normalization in speech perception. Proceedings 1992 International Conference on Spoken Language Processing; Banff, Canada. 1992. pp. 587–590.

3. Luce PA. Neighborhoods of words in the mental lexicon. Research on Speech Perception Technical Report No. 6. Bloomington, Ind: Indiana University; 1986.

4. Tillman TW, Carhart R. An expanded test for speech discrimination utilizing CNC monosyllabic words. Northwestern University Auditory Test No. 6. Tech Rep No. SAM-TR-66-55. Brooks Air Force Base, Tex: USAF School of Aerospace Medicine; 1966.

5. House AS, Williams CE, Hecker MHL, Kryter KD. Articulation-testing methods: consonantal differentiation with a closed-response set. J Acoust Soc Am. 1965;35:158–166. doi: 10.1121/1.1909295.

6. Karl JR, Pisoni DB. The role of talker-specific information in memory for spoken sentences [abstract]. J Acoust Soc Am. 1994;95:2873.

7. Ross M, Lerman J. A picture identification test for hearing impaired children. J Speech Hear Res. 1970;13:44–53. doi: 10.1044/jshr.1301.44.