Abstract

Cochlear implants allow many individuals with profound hearing loss to understand spoken language, even though the impoverished signals provided by these devices poorly preserve acoustic attributes long believed to support recovery of phonetic structure. Consequently questions may be raised regarding whether traditional psycholinguistic theories rely too heavily on phonetic segments to explain linguistic processing while ignoring potential roles of other forms of acoustic structure. This study tested that possibility. Adults and children (8 years old) performed two tasks: one involving explicit segmentation, phonemic awareness, and one involving a linguistic task thought to operate more efficiently with well-defined phonetic segments, short-term memory. Stimuli were unprocessed signals (UP), amplitude envelopes (AE) analogous to implant signals, and unprocessed signals in noise (NOI) which provided a degraded signal for comparison. Adults’ results for short-term recall were similar for UP and NOI, but worse for AE stimuli. The phonemic awareness task revealed the opposite pattern across AE and NOI. Children’s results for short-term recall showed similar decrements in performance for AE and NOI compared to UP, even though only NOI stimuli showed diminished results for segmentation. Conclusions were that perhaps traditional accounts are too focused on phonetic segments, something implant designers and clinicians need to consider.

For much of the history of speech perception research, the traditional view has been that listeners automatically recover phonetic segments from the acoustic signal, and use those segments for subsequent linguistic processing. According to this view, listeners collect from the speech signal temporally brief and spectrally distinct bits, called acoustic cues; in turn, those bits specify the consonants and vowels comprising the linguistic message that was heard (e.g., Cooper, Liberman, Harris & Grubb, 1958; Stevens, 1972; 1980). This prevailing viewpoint spawned decades of research investigating which cues, and precisely which settings of those cues, define each phonetic category (Raphael, 2008). That recovered phonetic structure then functions as the key to other sorts of linguistic processing, according to traditional accounts (e.g., Chomsky & Halle, 1968; Ganong, 1980; Luce & Pisoni, 1998; Marslen-Wilson & Welsh, 1978; McClelland & Elman, 1986; Morton, 1969). For example, the storage and retrieval of sequences of words or digits in a short-term memory buffer are believed to rely explicitly on listeners’ abilities to use a phonetic code in that storage and retrieval, a conclusion supported by the finding that recall is poorer for phonologically similar than for dissimilar materials (e.g., Baddeley, 1966; Conrad & Hull, 1964; Nittrouer & Miller, 1999; Salame & Baddeley, 1986).

As compelling as these traditional accounts are, however, other evidence has shown that forms of acoustic structure in the speech signal not fitting the classic definition of acoustic cues affect linguistic processing, and do so in ways that do not necessitate the positing of a stage of phonetic recovery. In particular, talker-specific attributes of the speech signal can influence short-term storage and recall of linguistic materials. To this point, Palmeri, Goldinger and Pisoni (1993) presented strings of words to listeners, and asked those listeners to indicate whether specific words towards the ends were newly occurring or repetitions of ones heard earlier. Results showed that decision accuracy improved when the repetition was spoken by the person who produced the word originally, indicating that information about the speaker’s voice was stored, separately but along with phonetic information, and helped to render each item more distinct.

Additional evidence that talker-specific information modulates speech perception is found in the time it takes to make a phonetic decision. Mullennix and Pisoni (1990) showed that this time is slowed when tokens are produced by multiple speakers, rather than a single speaker, indicating that some effort goes into processing talker-specific information and such processing is independent of that involved in recovery of phonetic structure. Thus, regardless of whether its function is characterized as facilitative or as inhibitory for a given task, listeners apparently attend to structure related to talker identity in various psycholinguistic processes. Information regarding talker identity is available in the temporal fine structure of speech signals, structure that arises from the individual opening and closing cycles of the vocal folds and appears in spectrograms as vertical striations across the x axis.

Speech perception through cochlear implants

One challenge to the traditional view of speech perception and linguistic processing described above arose when clinical findings began to show that listeners with severe-to-profound hearing loss who receive cochlear implants are often able to recognize speech better than might be expected, if those views were strictly accurate. Some adult implant users are able to recognize close to 100% of words in sentences correctly (Firszt et al., 2004). The mystery of how those implant users manage to recognize speech as well as they do through their devices arises because the signal processing algorithms currently used in implants are poor at preserving the kinds of spectral and temporal structure in the acoustic signal that could be described as either acoustic cues or as temporal fine structure. Yet many deaf adults who choose to get cochlear implants can understand speech with nothing more than the signals they receive through those implants. The broad goal of the two experiments reported here was to advance our understanding of the roles played in linguistic processing by these kinds of signal structure, and in so doing, to better appreciate speech perception through cochlear implants.

The primary kind of structure preserved by cochlear implants is amplitude change across time for a bank of spectral bands, something termed ‘temporal’ or ‘amplitude’ envelopes. The latter term is used in this report. In this signal processing, any kind of frequency-specific structure within each band is effectively lost. In particular, changes in formant frequencies near syllable margins where consonantal constrictions and open vowels intersect are lost, except when they are extensive enough to cross filtering bands. Those formant transitions constitute important acoustic cues for many phonetic decisions. Other sorts of cues, such as release bursts and spectral shapes of fricative noises, are diminished in proportion to the decrement in numbers of available channels. In all cases, temporal fine structure is eliminated. Nonetheless, degradation in speech recognition associated with cochlear implants is usually attributed to the reduction of acoustic cues, rather than to the loss of temporal fine structure, because fine structure has historically been seen as more robustly related to music than to speech perception – at least for non-tonal languages like English (Kong, Cruz, Jones & Zheng, 2004; Smith, Delgutte & Oxenham, 2002; Xu & Pfingst, 2003). However, the role of temporal fine structure in linguistic processing beyond recognition has not been thoroughly examined. Whether clinicians realize it or not, standard practice is currently based on the traditional assumptions outlined at the start of this report: If implant patients can recognize word-internal phonetic structure, the rest of their language processing must be normal. This study tested that assumption.

Signal processing and research goals

For research purposes, speech-related amplitude envelopes are typically derived by dividing the speech spectrum into a number of channels, and half-wave rectifying those channels to recover amplitude structure across time. The envelopes resulting from that process are used to modulate bands of white noise, which lack frequency structure, and presented to listeners with normal hearing. That method, usually described with the generic term ‘vocoding,’ was used in this study to examine questions regarding the effects of reduced acoustic cues and elimination of temporal fine structure on linguistic processing. For comparison, natural speech signals not processed in any way were presented in noise. This was done to have a control condition with diminishment in acoustic cues due to energetic masking, but which preserved temporal fine structure. Thus, the two conditions of signal degradation both diminished available acoustic cues, but while one eliminated temporal fine structure as well, the other preserved it. The goal here was to compare outcomes across signal types in order to shed light on the extent to which disruptions in linguistic processing associated with amplitude envelopes are attributable to deficits in the availability of phonetically relevant acoustic cues or to the loss of temporal fine structure.

The current study was not concerned with how well listeners can recognize speech from amplitude envelopes. Numerous experiments have tackled that question, and have collectively shown that listeners with normal hearing can recognize syllables, words, and sentences rather well with only four to eight channels (e.g., Eisenberg, Shannon, Schaefer Martinez, Wygonski & Boothroyd, 2000; Loizou, Dorman & Tu, 1999; Nittrouer & Lowenstein, 2010; Nittrouer, Lowenstein & Packer, 2009; Shannon, Zeng, Kamath, Wygonski & Ekelind, 1995). The two experiments reported here instead focused on the effects of this signal processing on linguistic processing beyond recognition – specifically on short-term memory – and the relationship of that functioning to listeners’ abilities to recover phonetic structure with these signals. A pertinent issue addressed by this work was how necessary it is for listeners to recover explicitly phonetic structure from speech signals in order to store and retrieve items in a short-term memory buffer. If either of the signal processing algorithms implemented in this study were found to hinder short-term memory, the effect could arise either by harming listeners’ abilities specifically to recover phonetic structure or by impairing perceptual processing through signal degradation. Outcomes of this study should provide general information about normal psycholinguistic processes and about how signal processing for implants might best be designed to facilitate language functioning in the real world where more than word recognition is required.

Speech perception by children

Finally, the effects on linguistic processing of diminishment in acoustic cues and temporal fine structure were examined for both adults and children in these experiments because it would be important to know if linguistic processing through a cochlear implant might differ depending on listener age. In speech perception, children rely on (i.e., weight) components of the signal differently than adults do, so the possibility existed that children might be differently affected by the reduction of certain acoustic cues. In particular, children rely strongly on intrasyllabic formant transitions for phonetic judgments (e.g., Greenlee, 1980; Nittrouer 1992; Nittrouer & Miller 1997a, 1997b; Nittrouer & Studdert-Kennedy, 1987; Wardrip-Fruin & Peach, 1984). These spectral structures are greatly reduced in amplitude envelope replicas of speech, but are rather well preserved when speech is embedded in noise. Cues such as release bursts and fricative noises are likely to be masked by noise, but are preserved to some extent in amplitude envelopes. However, children do not weight these brief, spectrally static cues as strongly as adults do (e.g., Nittrouer, 1992; Nittrouer & Miller 1997a, 1997b; Nittrouer & Studdert-Kennedy, 1987; Parnell & Amerman, 1978). Consequently, children might be more negatively affected when listening to amplitude-envelope speech than adults are. Of course, it could be the case that children are simply more deleteriously affected by any kind of signal degradation than adults. This would happen, for example, if signal degradation is more likely to create informational masking (i.e., cognitive or perceptual loads) for inexperienced listeners. Comparison of outcomes for amplitude envelopes and noise-embedded signals could help explicate the source of age-related differences in those outcomes, if observed.

On the other hand, there are several developmental models that might actually lead to the prediction that children should attend more than adults to the broad kinds of structure preserved by amplitude envelopes (e.g., Davis & MacNeilage, 1990; Menn, 1978; Nittrouer, 2002; Waterson, 1971). For example, one study showed that infants reproduce the global, long-term spectral structure typical of speech signals in their native language before they produce the specific consonants and vowels of that language (Boysson-Bardies, Sagart, Halle & Durand, 1986). Therefore, it might be predicted that children would not be as severely hindered as adults by having only the global structure represented in amplitude envelopes to use in these linguistic processes because that is precisely the kind of structure that children mostly utilize anyway. This situation might especially be predicted to occur if the locus of any observed negative effect on short-term memory for amplitude envelopes was found to reside in listeners’ abilities to recover explicitly phonetic structure. Acquiring sensitivity to that structure requires a protracted developmental period (e.g., Liberman, Shankweiler, Fischer & Carter, 1974). Accordingly, it has been observed that children do not seem to code items in short-term memory using phonetic codes to the same extent that adults do (Nittrouer & Miller, 1999).

Summary

The current study differed from earlier ones examining speech recognition for amplitude envelopes in that recognition itself would not be examined. Rather, the abilities of listeners to perform a psycholinguistic function using amplitude-envelope speech that they could readily recognize were examined, and compared to their performance for speech in noise. Performance on that linguistic processing task was then compared to performance on a task requiring explicit awareness of phonetic units. The question asked was whether a lack of acoustic cues and/or temporal fine structure had effects on linguistic processing independent of phonetic recovery. Accuracy in performance on the short-term memory and phonemic awareness tasks was the principal dependent measure used to answer this question. However, it was also considered possible that even if no decrements in accuracy were found for one or both processed signals there might be an additional perceptual load, leading to enhanced effort, involved in using these impoverished signals for such processes. As an index of effort, response time was measured. This has been shown to be a valid indicator of such effort (e.g., Cooper-Martin, 1994; Piolat, Olive & Kellogg, 2005). If results showed that greater effort is required for linguistic processing with these signals it would mean that perceptual efficiency is diminished when listeners must function with such signals.

In summary, the purpose of this study was to examine whether there is a toll in accuracy and/or efficiency of linguistic processing when signals lacking acoustic cues and/or temporal fine structure are presented. Adults and children were tested to determine if they are differently affected by these disruptions in signal structure. At the same time, the study investigated whether listeners need to recover explicitly phonetic structure from the speech signal in order to perform higher order linguistic processes, such as storing and retrieving items in a short-term memory buffer.

EXPERIMENT 1: SHORT-TERM MEMORY

Listeners’ abilities to store acoustic signals in a short-term memory buffer are facilitated when speech rather than non-speech signals are presented (e.g., Greene & Samuel, 1986; Rowe & Rowe, 1976). That advantage for speech has long been attributed to listeners’ use of phonetic codes for storing items in the short-term (or working) memory buffer (e.g., Baddeley, 1966; Baddeley & Hitch, 1974; Campbell & Dodd, 1980; Spoehr & Corin, 1978). Especially strong support for this position derives from studies revealing that typical listeners are able to recall strings of words more accurately when those words are non-rhyming rather than rhyming (e.g., Mann & Liberman, 1984; Nittrouer & Miller, 1999; Shankweiler, Liberman, Mark, Fowler & Fischer, 1979; Spring & Perry, 1983). Because non-rhyming words are more phonetically distinct than rhyming words, the finding that recall is more accurate for non-rhyming words suggests that phonetic structure must account for the superior recall of speech over non-speech signals. The goal of this first experiment was to examine the abilities of adults and children to store words in a short-term memory buffer when those words are either amplitude envelopes or embedded in noise, two kinds of speech signals that should not be as phonetically distinct as natural speech, in this case due to the impoverished nature of the signals rather than to similarity in phonetic structure. Then, by comparing outcomes of this experiment to results of the second experiment, which investigated listeners’ abilities to recover phonetic structure from those signals, an assessment could be made regarding whether it was particularly the availability of phonetic structure that explained outcomes of this first experiment. The hypothesis was that short-term recall would be better for those signals that provided better access to phonetic structure.

The numbers of channels used to vocode the signals as well as the signal-to-noise ratios used were selected to be minimally sufficient to support reliable word recognition after training. These processing levels meant that listeners could recognize the words, but restricted the availability of acoustic cues in the signal as much as possible. Earlier studies using either amplitude envelopes or speech embedded in noise conducted with adults and children (Eisenberg et al., 2000; Nittrouer et al., 2009; Nittrouer & Boothroyd, 1990) provided initial estimates of the numbers of channels and the signal-to-noise ratio(s) that should be used. Informal pilot testing helped to verify that the levels selected met the stated goals.

Environmental sounds were also used in the current experiment. Short-term recall for environmental sounds was viewed as a sort of anchor, designating the performance that would be expected when phonetic structure was completely inaccessible.

The recall task used in this first experiment was order recall, rather than item recall. In an order recall task listeners are familiarized with the list items before testing. In this experiment that design served an important function by ensuring that all listeners could recognize the items being used, in spite of being either amplitude envelopes or embedded in noise.

Finally, response times were measured and used to index perceptual load. Even if recall accuracy was found to be similar across signal types, it is possible that the effort required to store and recall those items would differ depending on signal properties. Including a measure of response time also meant it was possible to examine whether differences in how long it takes to respond could explain anticipated differences in recall accuracy on short-term memory tasks for adults and children. Several studies have demonstrated that children are poorer at both item and order recall than adults, but none has examined whether that age effect is due to differences in how long it takes listeners to respond. The memory trace in the short-term buffer decays rapidly (Baddeley, 2000; Cowan, 2008). There is evidence that children are slower to respond than adults, but that evidence comes primarily from studies in which listeners were asked to perform tasks with large cognitive loads, such as ones involving mental rotation or abstract pattern matching (e.g., Fry & Hale, 1996; Kail, 1991). Consequently, it is difficult to know the extent to which age-related differences for accuracy on memory tasks arise from generally slowed responding. This experiment addressed that issue.

Method

Listeners

Forty-eight adults between the ages of 18 and 40 years and 24 8-year-olds participated. Adults were recruited from the university community, so all were students or staff members. Children were recruited from local public schools through the distribution of flyers to children in regular classrooms. Twice the number of adults participated because going into this experiment it seemed prudent to test adults at two signal-to-noise ratios when stimuli were embedded in noise: one that was the same as the ratio used with children, and one that was 3 dB poorer. The 8-year-olds ranged in age from 7 years, 11 months to 8 years, 5 months. The flyers that were distributed indicated that only typically developing children were needed for the study, and there was no indication that any children with cognitive or perceptual deficits volunteered.

None of the listeners, or their parents in the case of children, reported any history of hearing or speech disorder. All listeners passed hearing screenings consisting of pure tones at 0.5, 1, 2, 4, and 6 kHz presented at 25 dB HL to each ear separately. Children were given the Goldman Fristoe 2 Test of Articulation (Goldman & Fristoe, 2000) and were required to score at or better than the 30th percentile for their age in order to participate. In fact, all children were error free. All children were also free from significant histories of otitis media, defined as six or more episodes during the first three years of life. Adults were given the reading subtest of the Wide Range Achievement Test 4 (Wilkinson & Robertson, 2006) and all demonstrated better than a 12th-grade reading level.

Equipment and materials

All testing took place in a soundproof booth, with the computer that controlled stimulus presentation in an adjacent room. Hearing was screened with a Welch Allyn TM262 audiometer using TDH-39 headphones. Stimuli were stored on a computer and presented through a Creative Labs Soundblaster card, a Samson headphone amplifier, and AKG-K141 headphones. This system has a flat frequency response and low noise. Custom-written software controlled the audio and visual presentation of the stimuli. Order of items in a list was randomized by the software before each presentation. Computer graphics (presented at 200 x 200 pixels) were used to represent each word, letter, number and environmental sound. In the case of the first three of these, a picture of the word, letter or number was shown. In the case of environmental sounds, the picture was of the object that usually produces the sound (e.g., a whistle for the sound of a whistle).

Stimuli

Four sets of stimuli were used for testing. These were eight environmental sounds (ES) and eight non-rhyming consonant-vowel-consonant nouns, presented in three different ways: (1) as unprocessed, natural productions (UP); (2) as amplitude envelopes by creating 8-channel noise-vocoded versions of those productions (AE); and (3) as the natural productions presented in noise at 0 dB or −3 dB signal-to-noise ratios (NOI). These specific settings for signal processing had resulted in roughly 60 to 80 percent correct recognition in earlier studies (Eisenberg et al., 2000; Nittrouer & Boothroyd, 1990), and pilot testing showed that with very little training adults and 8-year-olds recognized words in a closed-set format 100 percent of the time.

All stimuli were created with a sampling rate of 22.05 kHz, 10-kHz low-pass filtering and 16-bit digitization. Word samples were spoken by a man, who recorded five samples of each word in random order. The words were ball, coat, dog, ham, pack, rake, seed, and teen. Specific tokens to be used were selected from the larger pool so that words matched closely in fundamental frequency, intonation, and duration. All were roughly 500 ms in length.

A MATLAB routine was used to create the 8-channel AE stimuli. All signals were first low-pass filtered with a high-frequency cutoff of 8,000 Hz. Cutoff frequencies between channels were 0.4, 0.8, 1.2, 1.8, 2.4, 3.0, and 4.5 kHz. Each channel was half-wave rectified and low-pass filtered with a 160-Hz high-frequency cutoff, and the results were used to modulate white noise limited by the same band-pass filters as those used to divide the speech signal into channels.
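The processing chain just described can be illustrated with a minimal Python sketch. This is not the MATLAB routine used in the study. Several details are assumptions made only for illustration: ideal brick-wall filtering in the FFT domain stands in for the unspecified filters, the lowest channel is assumed to start at 0 Hz, and the names `fft_bandlimit` and `noise_vocode` are hypothetical.

```python
import numpy as np

FS = 22050  # sampling rate reported in the text (Hz)
# Channel edges in Hz. The seven inner cutoffs and the 8,000-Hz upper limit
# come from the text; the 0-Hz lower edge is an assumption.
EDGES_HZ = [0, 400, 800, 1200, 1800, 2400, 3000, 4500, 8000]
ENV_CUTOFF_HZ = 160  # envelope cutoff reported in the text (Hz)


def fft_bandlimit(x, lo_hz, hi_hz, fs):
    """Keep components with lo_hz <= f < hi_hz (ideal filter; an assumption)."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spectrum[(freqs < lo_hz) | (freqs >= hi_hz)] = 0.0
    return np.fft.irfft(spectrum, n=len(x))


def noise_vocode(signal, fs=FS, edges_hz=EDGES_HZ,
                 env_cutoff_hz=ENV_CUTOFF_HZ, seed=0):
    """Return a noise-vocoded (amplitude-envelope) version of `signal`."""
    signal = np.asarray(signal, dtype=float)
    rng = np.random.default_rng(seed)
    noise = rng.standard_normal(len(signal))  # white-noise carrier
    out = np.zeros(len(signal))
    for lo, hi in zip(edges_hz[:-1], edges_hz[1:]):
        band = fft_bandlimit(signal, lo, hi, fs)
        rectified = np.maximum(band, 0.0)  # half-wave rectification
        envelope = fft_bandlimit(rectified, 0.0, env_cutoff_hz, fs)
        envelope = np.maximum(envelope, 0.0)  # clip small filter undershoot
        carrier = fft_bandlimit(noise, lo, hi, fs)  # same band as the speech
        out += envelope * carrier
    return out
```

Because each carrier is noise, frequency-specific structure within a channel and temporal fine structure are discarded, and only the slow amplitude changes in each band survive.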

The natural version of each word was also center-embedded in 980 ms of white noise with a flat spectrum at an SNR of either 0 dB or −3 dB.
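As a rough illustration of this embedding, the sketch below scales white noise to a target SNR and places the word at the center of a 980-ms noise segment. How the SNR was computed in the study is not stated here; the sketch assumes the ratio of the word's RMS to the RMS of the whole noise segment, and the function name `embed_in_noise` is hypothetical.

```python
import numpy as np


def embed_in_noise(word, snr_db, fs=22050, noise_ms=980, seed=0):
    """Center `word` in white noise scaled to `snr_db` (RMS-based; an assumption).

    Returns the mixture and the scaled noise on its own.
    """
    word = np.asarray(word, dtype=float)
    n_noise = int(round(fs * noise_ms / 1000.0))
    if len(word) > n_noise:
        raise ValueError("word is longer than the noise segment")
    rng = np.random.default_rng(seed)
    noise = rng.standard_normal(n_noise)  # flat-spectrum (white) noise
    word_rms = np.sqrt(np.mean(word ** 2))
    noise_rms = np.sqrt(np.mean(noise ** 2))
    # SNR (dB) = 20 * log10(word_rms / noise_rms), solved for the noise RMS
    target_noise_rms = word_rms / (10.0 ** (snr_db / 20.0))
    noise *= target_noise_rms / noise_rms
    start = (n_noise - len(word)) // 2  # equal noise before and after the word
    mixed = noise.copy()
    mixed[start:start + len(word)] += word
    return mixed, noise
```

Calling `embed_in_noise(word, 0.0)` or `embed_in_noise(word, -3.0)` would correspond to the two SNRs used for the NOI stimuli.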

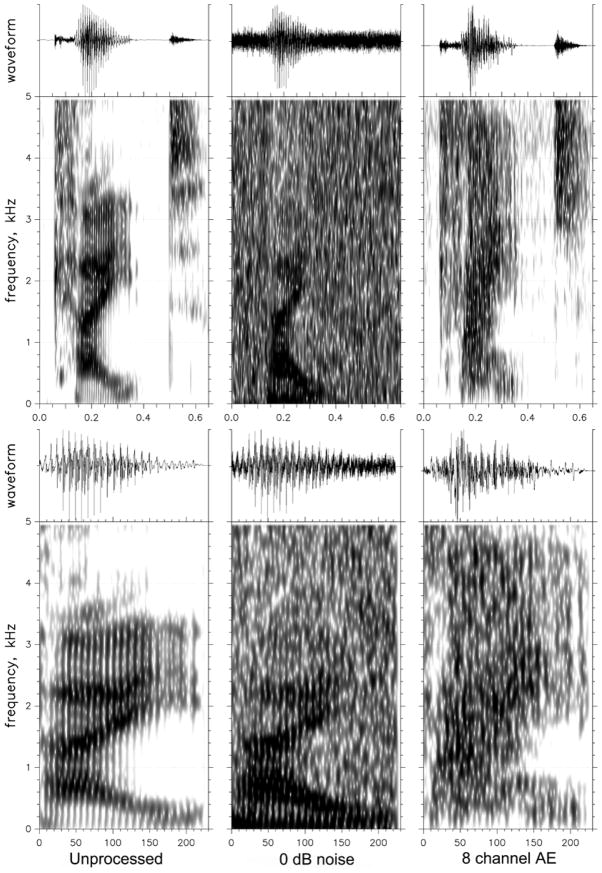

Spectrograms were obtained for a subset of the words in their UP, AE, and NOI conditions to glean a sense of what properties were preserved in the signal processing. Figure 1 shows waveforms and spectrograms of the word kite for these three conditions. The top waveforms and spectrograms show whole word files, and reveal that aspiration noise for the [k] and [t] releases was preserved by the AE signals, but not by the NOI stimuli. The bottom-most waveforms and spectrograms display only the vocalic portion of the word. These spectrograms reveal that neither formant structure nor temporal fine structure was well preserved in the AE signals, but both were rather well-preserved when words were embedded in noise (NOI signals). Figure 2 further highlights these effects. This figure shows both LPC and FFT spectra for 100 ms of the signal located in the relatively steady-state vocalic portion. Both the fine structure, particularly in the low frequencies, and the formants are well preserved for the NOI signal, but not for the AE version.

Figure 1.

Waveforms and spectrograms for the word kite in the natural, unprocessed form, embedded in noise at a 0 dB SNR, and as 8-channel amplitude envelopes. The top-most waveforms and spectrograms are for the entire word. Time is on the x-axis and is shown as seconds. The bottom-most waveforms and spectrograms are for the vocalic word portion only. This time axis is in milliseconds.

Figure 2.

LPC and FFT spectra for the same 100-ms section taken from the vocalic portion of the word kite.

The environmental sounds were selected to be sounds that occur within most people’s environment. These sounds were selected to differ from each other in terms of tonality, continuity, and overall spectral complexity. The specific sounds were a bird chirping, a drill, glass breaking, a helicopter, repeated knocking on a door, a single piano note (one octave above middle C), a sneeze, and a whistle being blown. These stimuli were all 500 ms long.

Samples of eight non-rhyming letters (F, H, K, L, Q, R, S, Y) were used as practice. These were produced by the same speaker who produced the word samples. The numerals 1 through 8 were also used for practice, but these were not presented auditorily, so digitized audio samples were not needed.

Eight-year-olds were tested using six instead of eight stimuli in each condition in order to equate task difficulty across the two listener groups. The words teen and seed were removed from the word conditions, the sneeze and helicopter sounds were removed from the sound condition, and the letters K and L and numerals 7 and 8 were removed from the practice conditions.

Procedures

All testing took place in a single session of roughly 45 minutes to an hour. The screening procedures were always administered first, followed by the serial recall task. Items in the serial recall task were presented via headphones at a peak intensity of 68 dB SPL. The experimenter always sat at 90 degrees to the listener’s left. A 23-in. widescreen monitor was located in front of the listener, 10 in. from the edge of the table, angled so that the experimenter could see the monitor as well. A wireless mouse on a mousepad was located on the table between the listener and the monitor, and was used by the listener to indicate the order of recall of word presentation. The experimenter used a separate wired mouse when needed to move between conditions. Pictures representing the letters, words, or environmental sounds appeared across the top of the monitor after the letters, words, or sounds were played over the headphones. After the pictures appeared, listeners clicked on them in the order recalled. As each image was clicked, it dropped to the middle of the monitor, into the next position going from left to right. The order of pictures could not subsequently be changed. Listeners had to keep their hand on the mouse during the task, and there could be no articulatory movement of any kind (voiced or silent) between hearing the items and clicking all the images. Software recorded both the order of presentation and the listener’s answers, and calculated how much time elapsed between the end of the final sound and the click on the final image.

Regarding the NOI condition, children heard words at only 0 dB SNR. Two groups of adults participated in this experiment, with each group hearing words at one of the two SNRs: 0 dB or −3 dB. Nittrouer and Boothroyd (1990) had found consistently across a range of stimuli that recognition accuracy for adults and children was equivalent when children had 3 dB more favorable SNRs than adults, so this procedure was implemented to see if maintaining this difference would have a similar effect on processing beyond recognition.

Because there were four types of stimuli (UP, NOI, AE, and ES) there were 24 possible orders in which these stimulus sets could be presented. One adult or child was tested on each of these possible orders, mandating the sample sizes used. Again, adults were tested with either a 0 dB or a −3 dB SNR, doubling the number of adults needed. Testing with each stimulus type consisted of ten lists, or trials, and the software generated a new order for each trial.
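The count of 24 follows from the number of permutations of four stimulus sets (4! = 24). A minimal sketch of that counterbalancing is given below; the listener identifiers and the `assign_orders` helper are hypothetical and serve only to illustrate one listener per order.

```python
from itertools import permutations

STIMULUS_TYPES = ("UP", "NOI", "AE", "ES")

# Every possible presentation order of the four stimulus sets: 4! = 24.
ORDERS = list(permutations(STIMULUS_TYPES))


def assign_orders(listener_ids):
    """Pair each listener with one unique presentation order."""
    if len(listener_ids) != len(ORDERS):
        raise ValueError("need exactly one listener per presentation order")
    return dict(zip(listener_ids, ORDERS))


# Hypothetical identifiers for one group of 24 listeners.
assignment = assign_orders([f"listener_{i:02d}" for i in range(1, 25)])
```

With two adult groups (one per SNR), the same assignment would be repeated for each group, giving the 48 adults reported.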

Before the listener entered the soundproof booth, the experimenter set up the computer so that stimulus conditions could be presented in the order selected for that listener. The first task during testing was a control task for the response time measure. Colored squares with the numerals 1 through 8 (or in the case of 8-year-olds, 1 through 6) were displayed in a row in random order across the top of the screen. The listener was instructed to click on the numerals in order from left to right across the screen. The experimenter demonstrated one time, and then the listener performed the task four times as practice. Listeners were instructed to keep their dominant hands on the wireless mouse and to click the numbers as fast as they comfortably could. After this practice session, the listener performed the task five times so a measure could be obtained of the time required for the listener to click on the number of items to be used in testing. The mean time it took for the listener to click on the numbers from left to right was used to obtain a ‘corrected’ response time during testing.
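The text does not spell out how the control measure entered the correction. One plausible reading, assumed here purely for illustration, is that the mean time from the five left-to-right control runs was subtracted from each trial's response time. The function name and the example values below are hypothetical.

```python
from statistics import mean


def corrected_response_time(trial_rt_s, control_rts_s):
    """Trial response time minus the mean control clicking time (assumed rule).

    trial_rt_s: seconds from the end of the final sound to the final click.
    control_rts_s: times from the left-to-right control runs, in seconds.
    """
    return trial_rt_s - mean(control_rts_s)


# Hypothetical values: five control runs and one test trial.
control_runs = [2.0, 2.5, 1.5, 2.0, 2.0]
corrected = corrected_response_time(6.0, control_runs)
```

Under this assumption, the corrected value reflects time spent on recall beyond the motor time needed simply to click through the images.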

Next the listener was instructed to click the numerals in numerical order, as fast as they comfortably could. This was also performed five times, and was done to provide practice clicking on images in an order other than left to right.

The next task was practice with test procedures using the letter strings. The experimenter explained the task, and instructed the listener not to talk or whisper during it. The list of letters was presented over headphones and then the images of the letters immediately appeared in random order across the top of the screen. The experimenter demonstrated how to click on each in the order heard as quickly as possible. The listener was then provided with nine practice trials. Feedback regarding accuracy of recall was not provided, but listeners were reminded, if need be, to keep their hands on the mouse during stimulus presentation and to refrain from any articulatory movements until after the reordering task was completed.

The experimenter then moved to the first stimulus type to be used in testing, and made sure the listener recognized each item. To do this with words, all images were displayed on the screen, and the words were played one at a time over the headphones. After each word was played, the experimenter repeated the word and then clicked on the correct image. The software then displayed the images in a different order, and again played each word one at a time. After each presentation the listener was asked to repeat the word and click on the correct image. Feedback was provided if an error in clicking or naming the correct image was made on the first round. On a second round of presentation, listeners were required to select and name all images without error. No feedback was provided this time. If a listener made an error on any item, that listener was dismissed. For listeners who were tested with the AE or NOI stimuli before the UP words, practice with the UP words was provided first, before practice with the processed stimuli. This gave all listeners an opportunity to hear the natural tokens before the processed stimuli.

This pre-test to make sure listeners recognized each item was done just prior to testing with each of the four stimulus sets. With the ES stimuli, however, the experimenter never gave the sounds verbal labels. When sounds were heard for the first time over headphones, each image was silently clicked. This was done explicitly to prevent listeners from using the name of the object making the sound to code these sounds in short-term memory. If a listener gave a sound a label, the experimenter corrected the individual, stating that the task should be conducted silently. Of course, there was no way to prevent listeners from doing so covertly.

Testing with ten trials of the items took place immediately after the pre-test with those items. After testing with each stimulus type, the labeling task described above was repeated to ensure that listeners had maintained correct associations between images and words or sounds through testing. If a listener was unable to match the image to the correct word or sound for any item, that individual’s data were not included in the analyses.

The software automatically compared order recall to word or sound orders actually presented, and calculated the number of errors for each list position (out of 10) and total errors (out of 80 or 60, depending on whether adults or children were tested). The software also recorded the time required for responding to each trial, and computed the mean time across the 10 trials within the condition. A corrected response time (cRT) for each condition was obtained for each listener by subtracting the mean response time of the control condition from the mean response time for testing in each condition.
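The scoring just described can be illustrated with a minimal sketch. It is not the software used in the study. The function names are hypothetical, and it assumes that an item counts as an error whenever the item recalled at a list position differs from the item presented at that position.

```python
from statistics import mean


def position_errors(presented, recalled):
    """Count, per list position, the trials on which the recalled item
    differs from the presented item (assumed scoring rule)."""
    n_positions = len(presented[0])
    errors = [0] * n_positions
    for target, response in zip(presented, recalled):
        for pos in range(n_positions):
            if response[pos] != target[pos]:
                errors[pos] += 1
    return errors


def corrected_response_time(test_times, control_times):
    """cRT: mean test response time minus mean control-task time."""
    return mean(test_times) - mean(control_times)


# Hypothetical data: two trials of three items each
# (the study used 10 trials of 8 or 6 items).
presented = [["a", "b", "c"], ["c", "a", "b"]]
recalled = [["a", "c", "b"], ["c", "a", "b"]]

errors = position_errors(presented, recalled)
total_errors = sum(errors)

# Hypothetical times in seconds: test trials, then control trials.
crt = corrected_response_time([8.0, 9.0], [4.0, 4.5])

print(errors, total_errors, crt)
```

Subtracting the control mean removes the time a listener needs simply to click through the same number of images, so the remainder is attributable to the recall task itself.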

Results

All listeners were able to correctly recognize all items in all the processed forms, during both the pre-test and post-test trials, so data from all listeners were included in the statistical analyses.

Adults performed similarly in the NOI condition regardless of which SNR they heard: In terms of accuracy, they obtained 59% correct (15.7% SD) at 0 dB SNR and 57% (16.3% SD) correct at −3 dB SNR. In terms of response times, they took 4.09 sec (1.58 sec SD) at 0 dB SNR and 4.24 sec (1.73 sec SD) at −3 dB SNR. Two-sample t tests indicated that these differences were not significant (p > .10), so data were combined across the two adult groups in subsequent analyses.

Serial Position

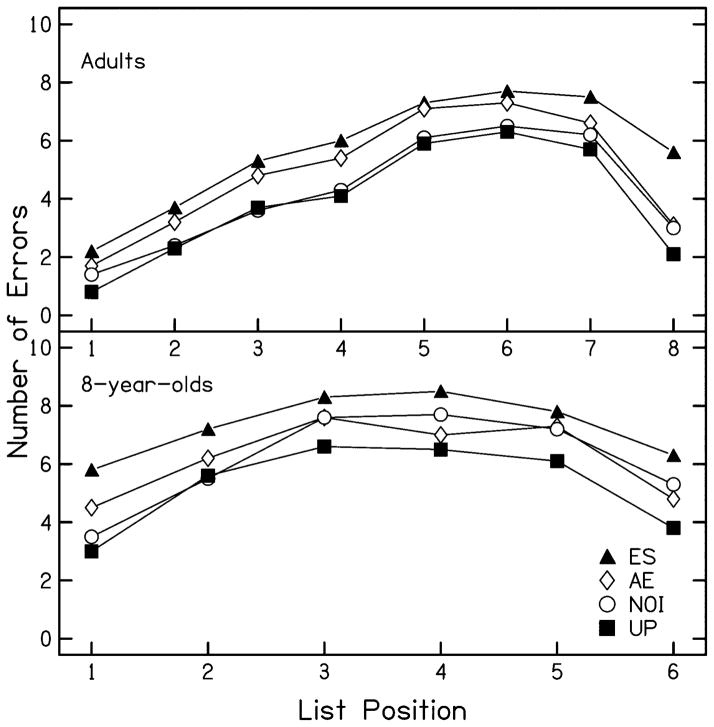

Figure 3 shows error patterns across list positions for each age group, for each stimulus condition. Overall, adults made fewer errors than 8-year-olds, and showed stronger primacy and recency effects. A major difference between adults and 8-year-olds was in the error patterns across conditions. For adults, there appears to be no difference between the UP and the NOI conditions, other than slightly stronger primacy and recency effects for the UP stimuli. Adults appear to have performed similarly for the ES and AE stimuli, until the final position where there was a stronger recency effect for the AE stimuli. Eight-year-olds appear to have performed similarly with the AE and NOI stimuli, and those scores fell between scores for the UP and ES stimuli. Only a slightly stronger primacy effect is evident for the NOI stimuli, compared to AE.

Figure 3.

Errors (out of 10 possible) for serial recall in Experiment 1 for all list positions in all conditions and by all listener groups.

Because adults and 8-year-olds did the recall task with different numbers of items, it was not possible to do an ANOVA on the numbers of errors for stimuli in each list position with age as a factor. Instead separate ANOVAs were done for each age group, with stimulus condition and list position as the within-subjects factors. Results are shown in Table 1. The main effects of condition and position were significant for both age groups. These results support the general observations that different numbers of errors were made across conditions, and that the numbers of errors differed across list positions. The findings of significant Condition × Position interactions reflect the slight differences in primacy and recency effects across conditions.

Table 1.

Outcomes of separate ANOVAs performed on adult and child data for stimulus condition and list position in Experiment 1.

| Source | df | F | p | η² |
|---|---|---|---|---|
| Adults | | | | |
| Condition | 3, 141 | 33.17 | <.001 | .07 |
| Position | 7, 329 | 197.89 | <.001 | .50 |
| Condition × Position | 21, 987 | 3.16 | <.001 | .01 |
| 8-year-olds | | | | |
| Condition | 3, 69 | 16.97 | <.001 | .12 |
| Position | 5, 115 | 67.00 | <.001 | .35 |
| Condition × Position | 15, 345 | 1.90 | .022 | .02 |

Correct Responding

To investigate differences across conditions more thoroughly, the sum of correct responses across list positions was computed for each condition, and transformed to percentage of correct items out of the total number presented (80 for adults and 60 for children). Table 2 shows mean percentages of items correctly recalled for each condition, for each age group. Adults scored 13–20 percentage points higher than 8-year-olds did. For both age groups, scores were highest for UP and lowest for ES, with scores for AE and NOI stimuli somewhere in between. A two-way ANOVA with age as the between-subjects factor and condition as the within-subjects factor supported these observations: Age, F (1, 70) = 35.08, p < .001, and condition, F (3, 210) = 43.94, p < .001, were both significant, but the Age × Condition interaction was not significant.

Table 2.

Percent correct responses across all list positions for adults and 8-year-olds for unprocessed (UP), speech in noise (NOI), 8-channel noise vocoded (AE) and environmental sound (ES) stimuli in Experiment 1. Standard deviations (SDs) are in parentheses.

| | UP | NOI | AE | ES |
|---|---|---|---|---|
| | M (SD) | M (SD) | M (SD) | M (SD) |
| Adults | 61.4 (12.4) | 58.2 (15.9) | 51.0 (12.1) | 43.4 (13.7) |
| 8-year-olds | 47.3 (16.7) | 38.5 (15.3) | 37.8 (12.7) | 26.7 (9.1) |

Although general patterns of results were similar for adults and children, age-related differences were found for the NOI and AE stimuli. As observed in Figure 3, adults’ scores for the UP and NOI conditions were nearly identical, while for 8-year-olds, scores on NOI and AE were nearly identical. These observations were confirmed by the results of a series of matched t tests, presented in Table 3. For adults, all comparisons were significant before Bonferroni corrections were applied, while for 8-year-olds, all comparisons were significant except for NOI vs. AE. Because the 4 conditions resulted in 6 comparisons, Bonferroni corrections were used, which meant that p had to be less than or equal to .00833 to be the equivalent of p < .05 for a one-comparison test. When these corrections were applied, the difference in adults’ scores for UP and NOI ceased to be significant.
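The Bonferroni threshold follows directly from the number of pairwise comparisons among the four conditions. The sketch below reproduces that arithmetic. The helper name `survives_bonferroni` is hypothetical, and the two p values checked are the uncorrected values reported in Table 3 for UP vs. NOI.

```python
from itertools import combinations

conditions = ["UP", "NOI", "AE", "ES"]

# Every unordered pair of conditions is one matched t test.
pairs = list(combinations(conditions, 2))
n_comparisons = len(pairs)

alpha = 0.05
per_test_alpha = alpha / n_comparisons  # Bonferroni-adjusted criterion


def survives_bonferroni(p, alpha=0.05, m=n_comparisons):
    """True if an uncorrected p value meets the adjusted criterion."""
    return p <= alpha / m


print(n_comparisons, round(per_test_alpha, 5))
print(survives_bonferroni(0.04))   # adults, UP vs. NOI
print(survives_bonferroni(0.008))  # 8-year-olds, UP vs. NOI
```

With six comparisons the criterion is .05/6, or about .00833, which is why an uncorrected p of .04 no longer counts as significant while .008 still does.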

Table 3.

Outcomes of matched t-tests performed on percent correct responses for adults and 8-year-olds separately in Experiment 1. For adults, df is 47; for 8-year-olds, df is 23. Precise p values are given for p < .10; Not Significant (NS) means p > .10.

| Source | t | p | Bonferroni |
|---|---|---|---|
| Adults: | | | |
| UP vs. NOI | 2.13 | .04 | NS |
| UP vs. AE | 6.76 | <.001 | <.001 |
| UP vs. ES | 8.85 | <.001 | <.001 |
| NOI vs. AE | 3.61 | <.001 | <.01 |
| NOI vs. ES | 6.14 | <.001 | <.001 |
| AE vs. ES | 3.50 | .001 | <.01 |
| 8-year-olds | | | |
| UP vs. NOI | 2.92 | .008 | <.05 |
| UP vs. AE | 3.63 | .001 | <.01 |
| UP vs. ES | 5.90 | <.001 | <.001 |
| NOI vs. AE | 0.33 | NS | NS |
| NOI vs. ES | 3.81 | .001 | <.01 |
| AE vs. ES | 4.08 | <.001 | <.01 |

Response Times

Response times were examined as a way of determining whether there were differences in the perceptual load introduced by the two kinds of processed stimuli. Table 4 shows mean cRTs for both groups in each condition. Adults’ response times appear to correspond to their accuracy scores in that the conditions in which they were most accurate show the shortest cRTs: Times were similar for the UP and NOI conditions, longer for AE, and longest for ES. Similarly, cRTs for 8-year-olds appear to correspond to their accuracy scores in that times were shortest for UP, similar for NOI and AE, and longest for ES. However, a series of matched t tests revealed a slightly more nuanced picture. These outcomes are shown in Table 5. For adults, the pattern described above was supported, before Bonferroni corrections were applied. However, once those corrections were applied, the differences between UP and AE and between AE and ES were no longer significant. For 8-year-olds, differences in response times between UP and NOI and UP and AE conditions did not reach statistical significance.

Table 4.

Mean corrected response times (in seconds) (cRT) for adults (8 items) and 8-year-olds (6 items) for all conditions in Experiment 1. SDs are in parentheses.

| | UP | NOI | AE | ES |
|---|---|---|---|---|
| | M (SD) | M (SD) | M (SD) | M (SD) |
| Adults | 4.23 (1.53) | 4.16 (1.64) | 4.70 (1.86) | 5.19 (1.91) |
| 8-year-olds | 2.80 (1.00) | 3.08 (1.16) | 3.14 (1.00) | 3.82 (1.10) |

Table 5.

Statistical outcomes of matched t-tests performed on mean cRTs for adults and 8-year-olds separately in Experiment 1. For adults, df is 47; for 8-year-olds, df is 23.

| Source | t | p | Bonferroni |
|---|---|---|---|
| Adults: | | | |
| UP vs. NOI | .42 | NS | NS |
| UP vs. AE | 2.28 | .03 | NS |
| UP vs. ES | 3.95 | <.001 | <.01 |
| NOI vs. AE | 3.02 | .004 | <.05 |
| NOI vs. ES | 4.82 | <.001 | <.001 |
| AE vs. ES | 2.13 | .04 | NS |
| 8-year-olds | | | |
| UP vs. NOI | 1.58 | NS | NS |
| UP vs. AE | 2.02 | .06 | NS |
| UP vs. ES | 5.68 | <.001 | <.001 |
| NOI vs. AE | .40 | NS | NS |
| NOI vs. ES | 3.44 | .002 | <.05 |
| AE vs. ES | 4.00 | .006 | <.01 |

Rate

It is unclear from response times shown in Table 4 whether adults have faster response times than children because the task for each group involved a different number of items. To deal with this discrepancy, rate was computed by dividing cRTs by the number of items in the task. Before examining those metrics, however, rate for the control condition was examined to get an indication of simple rates of responding for adults and children. In that condition, adults responded at a rate of .49 sec/item (.11 sec SD), while 8-year-olds were slightly slower, responding at a rate of .58 sec/item (.10 sec SD). This age effect was significant, F (1, 70) = 11.15, p = .001.
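As an illustration of the rate computation, the sketch below divides the group mean cRTs from Table 4 by the number of items each group recalled (8 for adults, 6 for 8-year-olds). The dictionary and function names are hypothetical. Working from group means is a simplification of the per-listener calculation, although dividing every listener's cRT by the same constant yields the same group mean.

```python
# Number of items per list for each age group.
ITEMS = {"Adults": 8, "8-year-olds": 6}

# Group mean corrected response times in seconds, from Table 4.
MEAN_CRT = {
    "Adults": {"UP": 4.23, "NOI": 4.16, "AE": 4.70, "ES": 5.19},
    "8-year-olds": {"UP": 2.80, "NOI": 3.08, "AE": 3.14, "ES": 3.82},
}


def rate(crt_seconds, n_items):
    """Seconds per item: corrected response time divided by list length."""
    return crt_seconds / n_items


rates = {
    group: {cond: round(rate(crt, ITEMS[group]), 2)
            for cond, crt in by_condition.items()}
    for group, by_condition in MEAN_CRT.items()
}

for group, by_condition in rates.items():
    print(group, by_condition)
```

Expressing response time per item puts the 8-item and 6-item tasks on a common scale, which is what allows age to be entered as a factor in the analysis of rate.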

Table 6 shows mean rates for each condition for adults and 8-year-olds. Rates appear to be similar across the two age groups, and a two-way ANOVA with age as the between-subjects factor and condition as the within-subjects factor confirmed this observation: Condition was significant, F (3, 210) = 18.62, p <.001, but the age effect and the Age × Condition interaction were not significant. Thus, even though 8-year-olds were slightly slower at the control task, they responded at rates similar to those of adults during the test conditions.

Table 6.

Mean corrected rates (in seconds per item) for adults and 8-year-olds for all conditions in Experiment 1. SDs are in parentheses.

| | UP | NOI | AE | ES |
|---|---|---|---|---|
| | M (SD) | M (SD) | M (SD) | M (SD) |
| Adults | .53 (.19) | .52 (.21) | .59 (.23) | .65 (.24) |
| 8-year-olds | .47 (.17) | .51 (.19) | .52 (.17) | .64 (.18) |

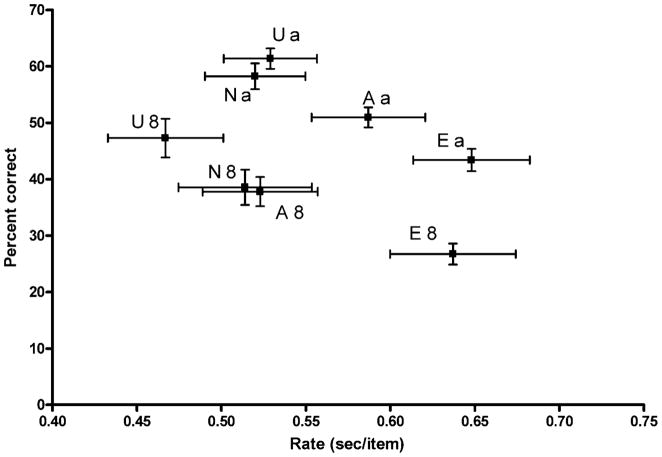

Rate and Accuracy

Finally, the question of whether rate of responding accounted for accuracy was addressed. Figure 4 shows the relationship between rate and accuracy. Overall, this graph reveals the general pattern of results. There is almost complete overlap in accuracy and rates for the NOI and AE stimuli for 8-year-olds. The strong correspondence in outcomes between the NOI and UP conditions for adults is also apparent. Furthermore, the differences in accuracy but similarity in rates between outcomes for children and adults are evident.

Figure 4.

The relationship between rate (in seconds per item) and percent correct responses in Experiment 1 in all conditions and by all listener groups. Error bars indicate standard errors of the mean. U=UP, N=NOI, A=AE, E=ES; a=Adults, 8=8-year-olds.

Some relationship between accuracy and rate of responding can be seen in the slightly negative slopes across the group × condition means shown in Figure 4. In order to examine this relationship more closely, correlations between rate and accuracy were computed in several ways: (a) for each age group and condition separately; (b) across all conditions for each age group separately; and (c) for both age groups within each condition. None of these correlation coefficients was significant, so it seems fair to conclude that rate could not account for accuracy, even though the two showed similar trends across conditions.

Discussion

This first experiment was conducted to examine how adults and children would perform with degraded signals on a task involving a linguistic process more complex than simple word recognition. At issue was the possibility that even when listeners are able to recognize words presented as processed signals, that signal processing might negatively affect higher order linguistic processing.

Not surprisingly, children were generally less accurate at recalling item order than were adults. This age effect was consistent across conditions, but was not related to children having slower response times than adults. Although there was a significant difference in baseline response times between adults and children, it was small in size and response rates during testing were equivalent for adults and 8-year-olds. Furthermore, correlations between accuracy and rate were not found to be statistically significant.

One of the most important outcomes of this experiment was the finding that listeners – both adults and children – were less accurate and slower at responding with amplitude envelopes than with natural, unprocessed signals. This was true, even though all listeners demonstrated perfect accuracy for recognizing the items. Consequently it may be concluded that there is a “cost” to processing signals that lack acoustic cues and/or temporal fine structure, even when recognition of those signals is unhampered. At the same time, listeners in neither group performed as poorly or as slowly with the amplitude envelopes as with environmental sounds. That outcome means there must be some benefit to short-term memory for linguistically significant over non-speech acoustic signals, even if the acoustic cues traditionally thought to support recovery of that structure are greatly diminished.

Several interesting outcomes were observed when it comes to speech embedded in noise. First, it was observed that adults’ performance was the same across SNRs differing by 3 dB, a difference that has been shown to affect accuracy of open-set word recognition by roughly 20 percent (Boothroyd & Nittrouer, 1988). Thus it might be concluded that as long as listeners can recognize the words, further linguistic processing is not affected. Of course, that conclusion might be challenged based on the fact that adults performed differently on order recall for speech in noise and amplitude envelopes, even though they could recognize the words in both conditions. The primary difference between these two conditions is that temporal fine structure was still available in the noise-embedded stimuli. Apparently that structure had a protective function for adults’ processing of signals on this linguistic task, a finding that has been reported by others (e.g., Lorenzi, Gilbert, Carn, Garnier & Moore, 2006).

The second interesting result observed for the speech-in-noise signals was that there was a very distinct difference in how adults and children performed with these signals. Adults’ performance was similar with these signals to their performance with unprocessed signals, although not quite identical. It was only when Bonferroni corrections were applied that statistical significance in accuracy of responding for these two conditions disappeared. Nonetheless, it seems fair to conclude that as long as adults could recognize these signals in noise there was little decrement in linguistic processing. Children, however, showed a decrement in performance for speech in noise equal in magnitude to that observed for amplitude envelopes. So, children were unable to benefit from the presence of temporal fine structure in the way that adults did.

There is, however, an objection that might be raised to both general conclusions that (1) adults were less affected by signals embedded in noise than by amplitude envelopes and (2) children were more deleteriously affected than adults by noise masking. That objection is that there really is no good way to assign a handicapping factor, so to speak, to different signal types or to the same signal type across listener age. Consequently there is no way to know whether the same degree of uncertainty was introduced by these different conditions of signal degradation and if that uncertainty was similar in magnitude for adults and children. The only available evidence to address those concerns comes from earlier studies (Eisenberg et al., 2000; Nittrouer & Boothroyd, 1990), which suggest that adults and 8-year-olds might reasonably be expected to perform similarly on open-set word recognition with 8-channel vocoded stimuli and stimuli in noise at the levels used here.

In summary, this experiment revealed some interesting trends regarding the processing of acoustic speech signals. When acoustic cues and temporal fine structure were diminished, linguistic processing above and beyond recognition was deleteriously affected for both adults and children. For adults this effect appeared to be due primarily to the diminishment of acoustic cues; adults seemed to benefit from the continued presence of temporal fine structure. Children performed similarly with amplitude envelopes and speech embedded in noise, suggesting that children might simply be negatively affected by any signal degradation. Such degradation may create a kind of informational masking for children that is not present for adults. However, the current experiment on its own could not provide conclusive evidence concerning what it is about amplitude envelopes that accounted for the decrements in performance seen for adults and children. Neither was this experiment on its own able to shed light on the extent to which listeners were able to recover explicitly phonetic structure from the acoustic speech signal, and so the extent to which observed effects on recall might have been due to hindrances in using phonetic structure for coding and retrieving items from a short-term memory buffer. The next experiment was undertaken to examine the extent to which recovery of phonetic structure is disrupted for adults and children with these signal processing algorithms. This information could help to determine if the negative effects observed for short-term memory can be directly attributed to problems recovering that structure.

EXPERIMENT 2: RECOVERING PHONETIC STRUCTURE

The main purpose of this second experiment was to determine if the patterns of results observed in Experiment 1 were associated with listeners’ abilities to recover phonetic structure from the signals they were hearing. To achieve that goal, a task requiring listeners to attend explicitly to phonetic structure within words was used. Some tasks requiring attention to that level of structure require only implicit sensitivity; these are tasks such as non-word repetition (e.g., Dillon & Pisoni, 2001). Others require explicit access of phonemic units, such as when decisions need to be rendered regarding whether test items share a common segment (e.g., Colin, Magnan, Ecalle & Leybaert, 2007). The latter sort of task was used here, and the specific task used is known as Final Consonant Choice (FCC). This task requires listeners to render a judgment of which word, out of a choice of three, ends with the same final consonant as a target word. It has been used previously (Nittrouer, 1999; Nittrouer, Shune & Lowenstein, 2011), and consists of 48 trials. As with Experiment 1, the stimuli were processed as amplitude envelopes and as speech in noise. Unlike Experiment 1, each listener heard words with only one of the processing strategies, as well as in their natural, unprocessed form. This design was due to the fact that dividing the 48 trials in the task across three conditions would have resulted in too few trials per condition.

Based on the findings of Experiment 1, it could be predicted that adults would show similarly accurate and fast responses for the unprocessed stimuli and words in noise. A decrement in performance with amplitude envelopes would be predicted for adult listeners. Children would be expected to perform less accurately than adults overall. It would be expected that children would perform best for the unprocessed stimuli, and show similarly diminished performance with both the amplitude envelopes and words in noise. Children were not necessarily expected to be slower to respond than adults.

Although these predictions are based on outcomes of Experiment 1, data collection for the two experiments actually occurred simultaneously, but on separate samples of listeners. All listeners in each group needed to meet the same criteria for participation, and both groups consisted of typical language users. Listeners were randomly assigned to each experiment. Consequently, the groups were considered to be equivalent across experiments, so results could be compared across experiments. It would have been desirable to use the same listeners in both experiments, but the designs of the experiments militated against doing so. In particular, listeners in this second experiment, on phonemic awareness, could only hear stimuli in one processed condition, amplitude envelopes or speech in noise, without decreasing the numbers of stimuli in each condition too greatly. In the experiment on short-term memory, listeners heard stimuli processed in both manners. There seemed no good way to control for the possible effects of unequal experience with the two kinds of signals across experiments, so the decision was made to use separate samples of listeners.

Finally, this second experiment was designed to measure differences in phonetic recovery among signal processing conditions, and not phonological processing abilities per se. Therefore, it was important to include only listeners with typical (age-appropriate) sensitivities to phonetic structure. To make sure that the 8-year-olds had phonological processing abilities typical for their age, they completed a second phonemic awareness task, Phoneme Deletion (PD), with unprocessed speech only. In this task, the listener is required to provide the real word that would derive if a specified segment were removed from a nonsense syllable. This task is more difficult than the FCC task because the listener not only has to access the phonemic structure of an item, but also has to remove one segment from that structure, and blend the remaining parts. Including 8-year-olds who scored better than one standard deviation below the mean for their age from previous studies (Nittrouer, 1999; Nittrouer et al., 2011) provided assurance that all had typical phonological processing abilities. Adults were assumed to have typical phonological processing abilities, both because none reported any history of language problems and because all read at better than a 12th grade level.

Method

Listeners

Forty adults between the ages of 18 and 40 years and 49 eight-year-olds participated. The 8-year-olds ranged in age from 7 years; 11 months to 8 years; 5 months. All listeners were recruited in the same manner and met the same criteria for participation as those described in Experiment 1. Additionally the 8-year-olds in this experiment were given the Peabody Picture Vocabulary Test – 3rd Edition (PPVT-III) (Dunn & Dunn, 1997) and were required to achieve a standard score of at least 92 (30th percentile). Eight-year-olds also completed a PD task. This task was used in previous studies (Nittrouer, 1999; Nittrouer et al., 2011) where it was found that typically developing 2nd graders scored a mean of 24.8 items correct (6.2 SD) out of 32 items. The 8-year-olds in this study were required to achieve a score of at least 18 correct, which is approximately one standard deviation below that mean (24.8 − 6.2 = 18.6), in order to participate.
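The two inclusion criteria for 8-year-olds can be checked with a short sketch. The PD figures come from the text. The percentile calculation assumes that PPVT-III standard scores are normally distributed with a mean of 100 and a standard deviation of 15, which is the conventional standard-score scale but is not stated in this section. Variable names are hypothetical.

```python
from statistics import NormalDist

# Phoneme Deletion norms for typically developing 2nd graders (from the text).
pd_mean, pd_sd = 24.8, 6.2
pd_cutoff = pd_mean - pd_sd  # one standard deviation below the mean

# Assumed standard-score scale: mean 100, SD 15.
standard_scores = NormalDist(mu=100, sigma=15)
ppvt_percentile = standard_scores.cdf(92) * 100

print(round(pd_cutoff, 1))
print(round(ppvt_percentile, 1))
```

Under these assumptions the PD cutoff works out to 18.6 items, and a standard score of 92 falls just below the 30th percentile, in line with the criteria as reported.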

Equipment and Materials

Equipment was the same as that described in Experiment 1. Custom written software controlled the audio presentation of the stimuli. Children used a piece of paper printed with a 16-square grid and a stamp as a way of keeping track of where they were in the stimulus training (see Procedures section below).

Stimuli

The FCC task consisted of 48 test trials and six practice trials. Words are listed in Appendix A. These words were spoken by a man, who recorded the samples in random order. The FCC words were presented in three different ways: as unprocessed, natural productions (UP), 8-channel noise vocoded versions of those productions (AE), and natural productions presented in noise at 0 dB or −3 dB SNR (NOI). The AE and NOI stimuli were created using the same methods as those used in Experiment 1. All stimuli were presented at a sampling rate of 22.05 kHz with 10-kHz low-pass filtering and 16-bit digitization.

Appendix A.

Items from the final consonant choice (FCC) task. The target word is given in the left column, with the three choices in the right columns. The correct response is shown first here and is italicized, but order of presentation of the three choices was randomized for each listener.

| Practice Items | | | | | | | |
|---|---|---|---|---|---|---|---|
| 1. Rib | Mob | Phone | Heat | 2. Stove | Cave | Hose | Stamp |
| 3. Hoof | Tough | Shed | Cop | 4. Lamp | Tip | Rock | Juice |
| 5. Fist | Hat | Knob | Stem | 6. Head | Rod | Hem | Fork |
| Test Items | | | | | | | |
| 1. Nail | Bill | Voice | Chef | 2. Car | Stair | Foot | Can |
| 3. Hill | Bowl | Moon | Hip | 4. Pole | Land | Poke | |
| 5. Chair | Deer | Slide | Chain | 6. Door | Pear | Food | Dorm |
| 7. Gum | Lamb | Shoe | Gust | 8. Doll | Wheel | Pig | Beef |
| 9. Dime | Broom | Note | Cube | 10. Train | Van | Grade | Cape |
| 11. Home | Drum | Mouth | Prince | 12. Comb | Room | Cob | Drip |
| 13. Pan | Skin | Grass | Beach | 14. Spoon | Fin | Cheese | Back |
| 15. Thumb | Cream | Tub | Jug | 16. Bear | Shore | Rat | Clown |
| 17. Ball | Pool | Clip | Steak | 18. Rain | Yawn | Sled | Thief |
| 19. Hook | Neck | Mop | Weed | 20. Truck | Bike | Trust | Wave |
| 21. Boat | Skate | Bone | Frog | 22. Mud | Crowd | Mug | Dot |
| 23. Hive | Glove | Hike | Light | 24. Leaf | Roof | Leak | Suit |
| 25. Bug | Leg | Bus | Rope | 26. Cup | Lip | Plate | Trash |
| 27. House | Kiss | Mall | Dream | 28. Fish | Brush | Shop | Gym |
| 29. Meat | Date | Camp | Sock | 30. Duck | Rake | Song | Bath |
| 31. Kite | Bat | Mouse | Grape | 32. Nose | Maze | Goose | Zoo |
| 33. Cough | Knife | Log | Dough | 34. Dress | Rice | Noise | Tape |
| 35. Crib | Job | Hair | Wish | 36. Flag | Rug | Step | Cook |
| 37. Worm | Team | Soup | Price | 38. Wrist | Throat | Risk | Store |
| 39. Sand | Kid | Sash | Flute | 40. Hand | Lid | Hail | Run |
| 41. Milk | Block | Mitt | Tail | 42. Vest | Cat | Star | Mess |
| 43. Ant | Gate | Fan | School | 44. Desk | Lock | Tube | Path |
| 45. Barn | Pin | Night | Tag | 46. Box | Face | Mask | Book |
| 47. Park | Lake | Bed | Crown | 48. Horse | Ice | Lunch | Bag |

For the PD task there were 32 test items and six practice items, all recorded by the same speaker as the FCC words. These words are shown in Appendix B.

Appendix B.

Items from the phoneme deletion (PD) task. The segment to be deleted is in parentheses. The correct response is found by removing the segment to be deleted.

| Practice Items | |
|---|---|
| 1. pin(t) | 2. p(r)ot |
| 3. (t)ink | 4. no(s)te |
| 5. bar(p) | 6. s(k)elf |
| Test Items | |
| 1. (b)ice | 2. toe(b) |
| 3. (p)ate | 4. ace(p) |
| 5. (b)arch | 6. tea(p) |
| 7. (k)elm | 8. blue(t) |
| 9. jar(l) | 10. s(k)ad |
| 11. hil(p) | 12. c(r)oal |
| 13. (g)lamp | 14. ma(k)t |
| 15. s(p)alt | 16. (p)ran |
| 17. s(t)ip | 18. fli(m)p |
| 19. c(l)art | 20. (b)rock |
| 21. cream(p) | 22. hi(f)t |
| 23. dril(k) | 24. mee(s)t |
| 25. (s)want | 26. p(l)ost |
| 27. her(m) | 28. (f)rip |
| 29. tri(s)ck | 30. star(p) |
| 31. fla(k)t | 32. (s)part |

Procedures

The arrangement of the listener and experimenter in the test booth differed for this experiment from the first. Instead of the experimenter being at a 90-degree angle to the listener, as was the case in Experiment 1, the experimenter sat across the table from the listener. The keyboard used by the experimenter to control stimulus presentation and record responses was lower than the tabletop, so the listener could not see what the experimenter was entering.

Adults were tested in a single session of 45 minutes, and 8-year-olds were tested in one session of 45 minutes and one session of 30 minutes over two days. The first session was the same for adults and 8-year-olds. The screening procedures (hearing screening and the WRAT or Goldman-Fristoe) were administered first. Then the listener was trained with either the AE or NOI stimuli. Half of the listeners heard the AE stimuli and half heard the NOI stimuli. Adults heard the NOI stimuli at a −3 dB SNR, and 8-year-olds heard the NOI stimuli at a 0 dB SNR. Adults were tested at only one SNR here because equating abilities across age groups to recognize stimuli was presumed to be critical in this experiment with so many stimuli; the task seemed closer to open-set recognition than the task in the first experiment. Again, adults achieve similar open-set recognition scores to children with 3 dB poorer SNRs (Nittrouer & Boothroyd, 1990).

The training consisted of listening to and repeating each of the 192 words to be used, first in its unprocessed form and then in its processed form. The purpose of this training was to give listeners an opportunity to become acquainted with the kind of processed signal they would be hearing during testing; it was not meant to teach each word explicitly in its processed form. Listeners were told they would be learning to understand a robot’s speech. Eight-year-olds stamped a square in a 16-square grid every time 12 unprocessed-processed word pairs were presented, just to give them an idea of how close to completion they were.

After training, a 10-word repetition task was administered in order to determine the mean time it took the listener to repeat a single word. The software randomly picked 10 unprocessed words from the FCC word list. The experimenter instructed the listener to repeat each word as soon as possible after it finished playing. The experimenter pressed the space bar to play each word, and then pressed the space bar again as soon as the listener started to say the word. The time between the offset of each word and the experimenter’s space-bar press served as a measure of response time. These 10 response times were averaged for each listener as a control for measures of response time made during testing. This average served as an indication of the mean time it took for the listener to respond simply by repeating a word and for the experimenter to press the space bar.

The decision was made to have the experimenter mark the end of the response interval rather than using an automated method, such as a voice key, because of the difficulties inherent in testing children. Their voices tend to be breathy and/or soft, which requires threshold sensitivity to be set low. At the same time, children often fidget or make audible noises such as loud breathing, all of which can trigger a voice key, especially when threshold to activation is low. Consequently it was deemed preferable to use an experimenter-marked response interval. In this case, the same individual (the second author) collected all data, so response time was collected with only one judge. She kept her finger near the spacebar, so she could respond quickly. In any event, the time it generally took for her to hit that spacebar was calculated into the time that would be used as a correction for response times collected during testing.

After collecting measurements for corrected time, the experimenter told the listener the rules of the FCC task. The listener was instructed to repeat a target word (“Say _____”), and then to listen to the three choice words and report as quickly as possible which one had the same ending sound as the target word. The listener was told to pay attention to the sounds, and not how the words were spelled. The experimenter presented three practice trials by live voice, and provided feedback to the listener. The experimenter then started the practice module of the FCC software. The six practice items were presented in natural, unprocessed form. The program presented the target word in the carrier phrase “Say _____”. After the listener repeated the word, the three word choices were presented. The listener needed to say which of the three words ended in the same sound as the target word as quickly as possible. For these practice items, the listener was given specific, detailed feedback if needed. Then testing was conducted with the computerized program and digitized samples. No feedback was given during testing.

The software presented half of the 48 stimuli in the processed condition (AE or NOI) and half in the UP condition, in random order, with the stipulation that no more than two items in a row could be from the same condition. The word “Say” was always presented in the unprocessed form. For the AE or NOI stimuli, listeners were given three chances to repeat the target word exactly. If they did not repeat the processed target word exactly after three tries, the experimenter told them the word and they said it. The experimenter then pressed a key on the keyboard that triggered the playing of the three word choices. These words were never repeated. The experimenter hit the space bar as soon as the listener started to vocalize an answer. The time between the offset of the third word and the listener’s initiation of a response was recorded by the software. The experimenter recorded in the software whether the listener’s response was correct or incorrect.
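The ordering constraint described above can be illustrated with a minimal sketch. This is not the FCC software itself; the function names, the labels, and the restart-on-dead-end strategy are assumptions made for illustration. The sketch draws labels one at a time, weighted by how many items of each condition remain, and blocks any draw that would create a run of three.

```python
import random


def longest_run(labels):
    """Length of the longest stretch of identical consecutive labels."""
    longest = current = 1
    for prev, cur in zip(labels, labels[1:]):
        current = current + 1 if cur == prev else 1
        longest = max(longest, current)
    return longest


def constrained_order(n_items=48, max_run=2, seed=None):
    """Hypothetical helper: half 'processed' and half 'UP' labels, ordered so
    that no more than `max_run` consecutive items share a condition."""
    rng = random.Random(seed)
    half = n_items // 2
    while True:  # restart from scratch if a draw sequence hits a dead end
        remaining = {"processed": half, "UP": half}
        order = []
        while len(order) < n_items:
            # A label is blocked when the last `max_run` items already share it.
            blocked = len(order) >= max_run and len(set(order[-max_run:])) == 1
            options = [
                lab for lab, left in remaining.items()
                if left > 0 and not (blocked and lab == order[-1])
            ]
            if not options:
                break  # only the blocked label is left; try again
            weights = [remaining[lab] for lab in options]
            choice = rng.choices(options, weights=weights)[0]
            order.append(choice)
            remaining[choice] -= 1
        if len(order) == n_items:
            return order
```

Sequential drawing of this kind does not sample every valid order with equal probability; it simply guarantees the stated constraint and the 24/24 split.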

The measures collected by the software were used to calculate for each listener the percentage of correct answers, mean overall response time, and mean response time for correctly answered items and incorrectly answered items for the processed and unprocessed conditions separately. For each listener, a corrected response time (cRT) for each condition was obtained by subtracting the mean time of the ten control trials from the mean actual response time. A corrected response time for correctly answered items (cCART) and incorrectly answered items (cWART) was obtained in the same way.
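The arithmetic behind these measures can be sketched as follows. The function name, the input layout, and the numbers in the usage note are hypothetical; only the subtraction of the mean control time from each mean response time comes from the description above.

```python
from statistics import mean


def corrected_times(control_rts, trials):
    """Compute percent correct, cRT, cCART, and cWART for one condition.

    control_rts: response times (sec) from the control repetition trials.
    trials: list of (response_time_sec, is_correct) pairs for one condition.
    """
    baseline = mean(control_rts)  # mean time simply to repeat a word
    all_rts = [rt for rt, _ in trials]
    correct_rts = [rt for rt, ok in trials if ok]
    wrong_rts = [rt for rt, ok in trials if not ok]

    def corrected(rts):
        # None when a listener has no trials of that kind (e.g., no errors).
        return mean(rts) - baseline if rts else None

    return {
        "percent_correct": 100.0 * len(correct_rts) / len(trials),
        "cRT": corrected(all_rts),
        "cCART": corrected(correct_rts),
        "cWART": corrected(wrong_rts),
    }
```

For example, with ten control times of .25 sec and four illustrative trials, `corrected_times([0.25] * 10, [(1.0, True), (1.5, True), (2.0, False), (1.5, True)])` subtracts .25 sec from the mean of all four times, from the mean of the three correct trials, and from the single incorrect trial.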

On the second day, 8-year-olds were given the PPVT-III and were tested on the PD task. Although these tasks involved inclusionary criteria for this experiment, they were given after the FCC task so the FCC test procedures would be the same for adults and children. If the PD task had been given first to children, they would have had more practice with phonemic awareness tasks than adults. When the PD task was introduced, the experimenter first explained the rules, and gave examples via live voice. In this task, the listener repeats a nonsense word, and then is asked to say that word without one of the segments, or “sounds,” a process that turns it into a real word. For example, “Say plig. Now say plig without the ‘L’ sound.” The correct real word in this case would be pig. Six practice trials were provided, and the listener was given specific feedback if needed. Testing was then conducted with the 32 PD items. No feedback was given during testing. Response time was not recorded for this task. The listener was given three chances to repeat the nonsense word correctly. If the listener could not repeat it correctly, the experimenter recorded that the listener was unable to repeat it, and moved on to the next nonsense word. That item was consequently scored as incorrect. If the listener repeated the nonsense word correctly, the program then played the sound deletion cue (“Now say ____ without the ____ sound”). The experimenter either entered that the listener said the correct real word, or typed the word that was actually said into the computer interface, in which case the response was scored as incorrect.

Results

Nine 8-year-olds scored lower than 18 items correct on the PD task, so their data were excluded from the study, leaving 40 8-year-olds. Those 8-year-olds scored a mean of 26.4 items correct (3.3 SD) on the PD task, similar to the mean of 24.8 (6.2 SD) for typical second graders in Nittrouer et al. (2011). The mean PPVT-III standard score across the 40 8-year-olds included in the study was 115 (10 SD), which corresponds to the 84th percentile.

All listeners were able to correctly repeat all the AE and NOI stimuli during the training with the 192 words that were used in testing. Listeners generally repeated all words correctly when presented as targets during testing, as well. The greatest number of words that the experimenter needed to tell any one listener was 3, with a mean of 1.2 across listeners. In all cases this was due to small errors in vowel quality, so none of these errors would have impacted listeners’ abilities to make consonant judgments, had the experimenter not told them the target. Nonetheless, the option of excluding results from trials on which the listener was unable to correctly repeat the target was considered, so scores for percent correct on the overall FCC task were compared with those trials included and excluded. The greatest difference in scores occurred for 8-year-olds listening to NOI stimuli, and that difference was only 1.31 percentage points. All statistics were run with and without results for these trials, and no differences in results were found. Consequently the decision was made to report results with all trials included.

Correct Responding

Table 7 shows the percentage of correct answers for adults and children for each condition. Both groups of listeners (AE and NOI) had nearly identical scores for the UP stimuli, with adults scoring 15 to 19 percentage points better than 8-year-olds. Because adults scored at or above 90% correct with the UP and AE stimuli, arcsine transforms were used in all statistical analyses. A two-way ANOVA with age and condition as between-subjects factors was done on results from the UP stimuli for listeners in the two condition groups to ensure there were no differences in results for those stimuli. That analysis was significant for age, F (1,76) = 57.54, p < .001, but not significant for condition or the Age × Condition interaction (p > .10). This confirms observations that 8-year-olds made more errors than adults, but there was no difference between listeners as a function of which kind of processed stimuli they heard.
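For readers unfamiliar with the arcsine transform, a minimal sketch follows. The text does not say which variant was applied, so the common form, the arcsine of the square root of the proportion correct, is assumed here; some authors multiply the result by 2. The function name is hypothetical.

```python
import math


def arcsine_transform(percent_correct):
    """Assumed variant: asin(sqrt(p)), with p the proportion correct (0 to 1).

    The transform stretches scores near 0% and 100%, which reduces the
    compression of variance that occurs when scores approach ceiling.
    """
    p = percent_correct / 100.0
    if not 0.0 <= p <= 1.0:
        raise ValueError("percent_correct must lie between 0 and 100")
    return math.asin(math.sqrt(p))
```

Under this form, a 5-point gain near ceiling (90% to 95%) spans a larger transformed distance than the same gain at mid-range (50% to 55%), which is the property that motivates its use with high scores.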

Table 7.

Percent correct responses for adults and 8-year-olds for unprocessed (UP), speech in noise (NOI), and 8-channel noise vocoded (AE) stimuli in Experiment 2. SDs are in parentheses.

| | AE condition: UP, M (SD) | AE condition: AE, M (SD) | NOI condition: UP, M (SD) | NOI condition: NOI, M (SD) |
|---|---|---|---|---|
| Adults | 90.0 (5.5) | 91.5 (6.0) | 93.1 (5.3) | 84.6 (8.7) |
| 8-year-olds | 74.5 (13.0) | 70.4 (12.1) | 73.8 (12.3) | 62.5 (16.2) |

Scores for the AE condition appear similar to UP scores, but scores for the NOI condition were roughly 9 to 11 percentage points lower than for UP (8.5 points for adults and 11.3 points for 8-year-olds). A two-way ANOVA was performed on scores for these processed stimuli with age and condition as between-subjects factors. The main effect of age was significant, F (1, 76) = 77.23, p < .001, as was the main effect of condition, F (1, 76) = 10.66, p = .002, confirming that listeners performed differently with the two kinds of processed stimuli. The Age × Condition interaction was not significant, indicating that the difference across conditions was similar for both age groups.

Finally, scores were compared for the UP vs. processed stimuli (AE or NOI) for each age group. Looking first at the AE condition, matched t tests performed on the UP vs. AE scores for each age group separately were not significant. However, differences in scores for the UP vs. NOI stimuli were statistically significant for both adults, t (19) = 4.41, p<.001, and for 8-year-olds, t (19) = 2.71, p = .014. For this sort of phonemic awareness task, then, performance was negatively affected by signals being embedded in noise, but not by being processed as amplitude envelopes. That was true for adults, as well as for 8-year-olds.
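The matched t tests reported here compare two scores from the same listener. A minimal sketch of the statistic is given below, assuming the standard paired formula (mean of the within-listener differences divided by its standard error); the function name is hypothetical and no data from the study are reproduced.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(first, second):
    """Return (t, degrees_of_freedom) for a matched-pairs t test.

    first, second: equal-length score lists, one pair per listener.
    """
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length lists with at least 2 pairs")
    diffs = [a - b for a, b in zip(first, second)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean(diffs) / (sd / sqrt(len(diffs)))
    return t, len(diffs) - 1
```

With 20 listeners in each condition group, the degrees of freedom are 20 − 1 = 19, which matches the t (19) values reported in the text.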

Response Time for All Trials

For the 10 control trials, the mean time for repeating words was .26 sec (.08 sec SD) for adults and .31 sec (.08 sec SD) for 8-year-olds. Even though this difference was small, .05 seconds, it was significant for age, F (1, 76) = 8.55, p = .005. Children responded more slowly than adults, but not by very much.

Table 8 shows mean cRTs for each group for selecting the word with the same final consonant as the target. For this measure, the difference between adults and 8-year-olds is striking: For the UP stimuli, adults took less than a second to respond while 8-year-olds took more than 4 seconds. These longer response times could indicate that children required greater cognitive effort to complete the task, especially considering that adults’ and children’s response times on the control task differed by only .05 sec. As with correct responding, cRTs for the UP stimuli were similar regardless of whether listeners additionally heard AE or NOI stimuli. This similarity was confirmed using a two-way ANOVA on cRTs for UP stimuli, with age and condition as factors: age was significant, F (1, 76) = 149.8, p < .001, but neither condition nor the Age × Condition interaction was significant.

Table 8.

Corrected response times (in seconds) (cRT) for adults and 8-year-olds for all stimuli in Experiment 2. SDs are in parentheses.

| | AE condition: UP, M (SD) | AE condition: AE, M (SD) | NOI condition: UP, M (SD) | NOI condition: NOI, M (SD) |
|---|---|---|---|---|
| Adults | .72 (.40) | 1.04 (.68) | .66 (.51) | 1.17 (.69) |
| 8-year-olds | 4.09 (1.60) | 4.13 (2.11) | 4.29 (1.89) | 4.44 (1.53) |

Next a two-way ANOVA was performed on cRTs for the two sets of processed stimuli. Age was significant, F (1, 76) = 104.56, p < .001, but neither condition nor the Age × Condition interaction was significant. This outcome means that even though listeners were more accurate with AE than with NOI stimuli, they were no faster to respond.

Response times for each age group were also examined separately. Adults had longer cRTs for the AE and NOI stimuli than they did for the UP stimuli. This was confirmed by matched t tests performed on cRTs for UP vs. AE stimuli, t (19) = 3.21, p = .005, and UP vs. NOI stimuli, t (19) = 4.25, p < .001. These longer response times for processed stimuli indicate that greater cognitive effort was required to complete the task when stimuli were processed in some way. For the AE stimuli, these results mean that even though adults responded with the same level of accuracy as for the UP stimuli, it required greater effort. For the NOI stimuli, adults were both less accurate and slower than with the UP stimuli.

Eight-year-olds did not show any significant differences in cRTs for the AE and NOI stimuli, compared to the UP stimuli. This was confirmed by non-significant results for matched t-tests performed on cRTs for UP vs. AE stimuli and UP vs. NOI stimuli. These results for 8-year-olds suggest that recovering explicitly phonetic structure from the acoustic speech signal is something that is intrinsically effortful for children, even for natural, unprocessed stimuli.

Response Time for Correct and Incorrect Answers

In addition to looking at overall response times, response times for correct answers only (cCART) and incorrect (wrong) answers only (cWART) were computed in order to examine the relative contributions of each to total response times (cRTs). Table 9 shows mean cCARTs for each age group. Adults remained faster than 8-year-olds, and cCARTs for UP stimuli appear similar across the two conditions. This was confirmed using a two-way ANOVA on cCARTs for UP stimuli with age and condition as factors: age was significant, F (1,76) = 125.49, p < .001, but neither condition nor the Age × Condition interaction was significant.

Table 9.

Corrected response times (in seconds) for correct answers only (cCART) for adults and 8-year-olds for all stimuli in Experiment 2. SDs are in parentheses.

| | AE condition: UP, M (SD) | AE condition: AE, M (SD) | NOI condition: UP, M (SD) | NOI condition: NOI, M (SD) |
|---|---|---|---|---|
| Adults | .56 (.39) | .93 (.57) | .52 (.42) | .80 (.52) |
| 8-year-olds | 3.10 (1.51) | 3.27 (1.84) | 3.03 (1.21) | 3.91 (1.98) |